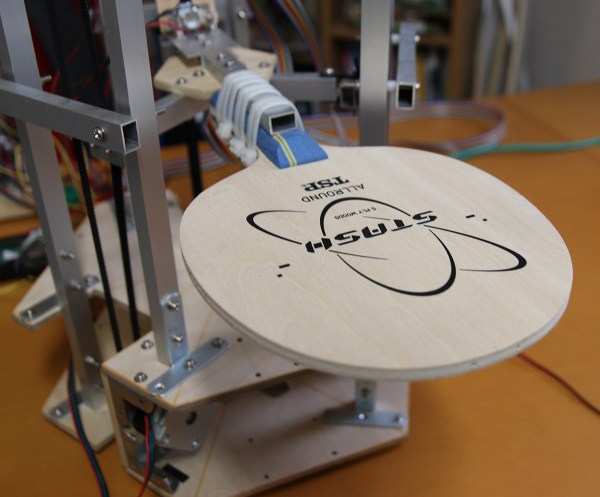

There aren’t too many sports named for the sound that is produced during the game. Even though it’s properly referred to as “table tennis” by serious practitioners, ping pong is probably the most obvious. To that end, [Nekojiru] built a ping pong ball juggling robot that used those very acoustics to pinpoint the location of the ball in relation to the robot. Not satisfied with his efforts there, he moved on to a visual solution and built a new juggling rig that uses computer vision instead of sound to keep a ping pong ball aloft.

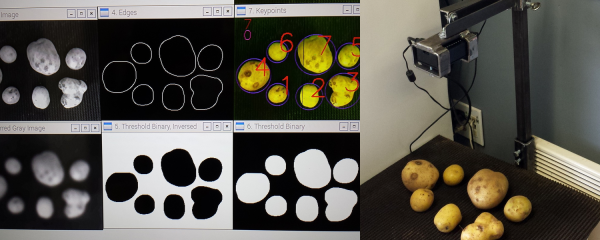

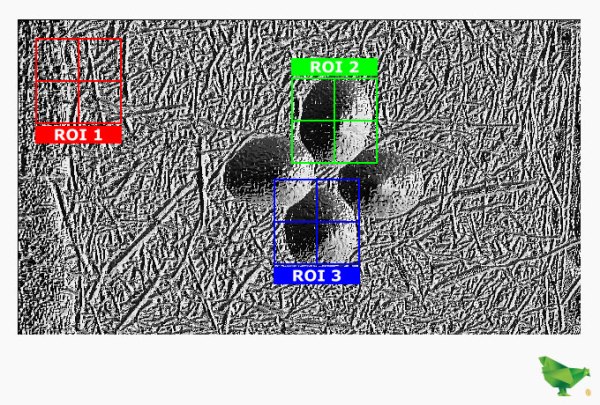

The main controller is a Raspberry Pi 2 with a Pi camera module attached. After some mishaps with the planned IR vision system, [Nekojiru] decided to use green light to illuminate the ball. He notes that OpenCV probably wouldn’t have worked for him because it’s not fast enough for the 90 fps that’s required to bounce the ping pong ball. An algorithm takes the incoming camera data, extracts 3D information about the ball, and directs the paddle to strike the ball in a particular way.
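
To get a feel for what tracking a green-lit ball can involve, here is a minimal Python sketch using NumPy. This is not [Nekojiru]’s code and makes no claim about how his algorithm works: it simply thresholds for bright green pixels, takes their centroid, and estimates distance from the blob’s apparent size with a pinhole camera model. The function name `locate_ball`, the threshold values, and the focal length are all hypothetical; only the 40 mm ball diameter and the 90 fps figure are real numbers.

```python
import numpy as np

FRAME_RATE_HZ = 90
# At 90 fps, all per-frame processing has to fit in roughly 11.1 ms.
FRAME_BUDGET_MS = 1000.0 / FRAME_RATE_HZ

BALL_DIAMETER_M = 0.040   # regulation table tennis ball
FOCAL_LENGTH_PX = 500.0   # hypothetical value; depends on the actual camera


def locate_ball(frame_rgb, green_min=200, other_max=100):
    """Return (x_px, y_px, distance_m) for a bright green blob, or None.

    frame_rgb is an (H, W, 3) uint8 array. The thresholds are assumptions
    chosen for illustration, not values from the original project.
    """
    r = frame_rgb[..., 0]
    g = frame_rgb[..., 1]
    b = frame_rgb[..., 2]

    # Keep pixels that are strongly green and weak in red and blue.
    mask = (g >= green_min) & (r <= other_max) & (b <= other_max)
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None

    # The centroid of the masked pixels gives the ball's image position.
    x_px = float(xs.mean())
    y_px = float(ys.mean())

    # Treat the blob as a filled circle to recover its apparent diameter,
    # then use the pinhole model: distance = f * real_size / apparent_size.
    diameter_px = 2.0 * np.sqrt(xs.size / np.pi)
    distance_m = FOCAL_LENGTH_PX * BALL_DIAMETER_M / diameter_px
    return x_px, y_px, distance_m
```

Whether something this simple runs inside the frame budget on a Pi 2 is exactly the kind of thing you’d have to measure on real hardware.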

If you’ve ever wanted to get into real-time object tracking, this is a great project to look over. The control system is well polished and the robot itself looks almost professionally made. Maybe it’s possible to build something similar to test [Nekojiru]’s hypothesis that OpenCV isn’t fast enough for this. If you want to get started with object tracking, there are some great projects that make use of OpenCV as well.