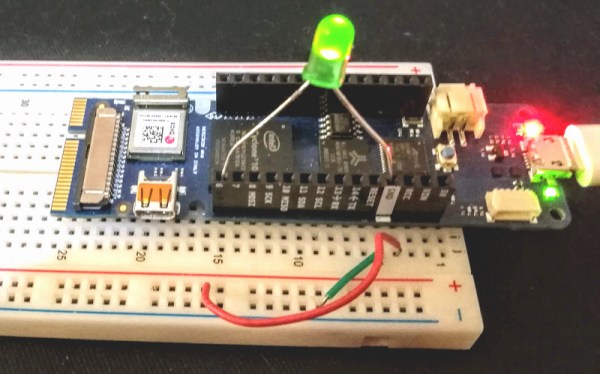

Last time I talked about how I took the open source Verifla logic analyzer and modified it to have some extra features. As promised, this time I want to show it in action, so you can incorporate it into your own designs. The original code didn’t actually capture your data. Instead, it created a Verilog simulation that would produce identical outputs to your FPGA. If you were trying to do some black box simulation, that probably makes sense. I just wanted to view data, so I created a simple C program that generates a VCD file you can read with common tools like gtkwave. It is all on GitHub along with the original files, even though some of those are not updated to match the new code (notably, the PDF document and the examples).

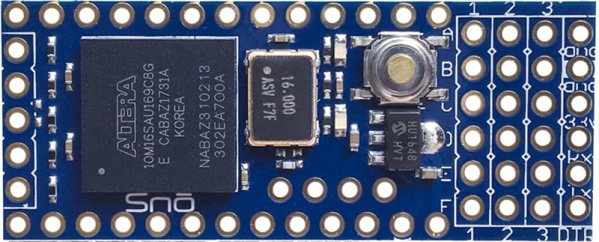

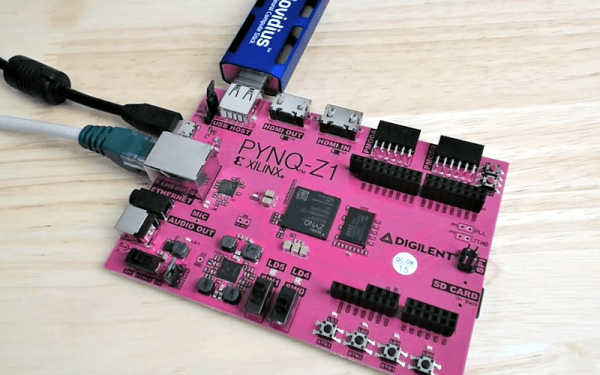

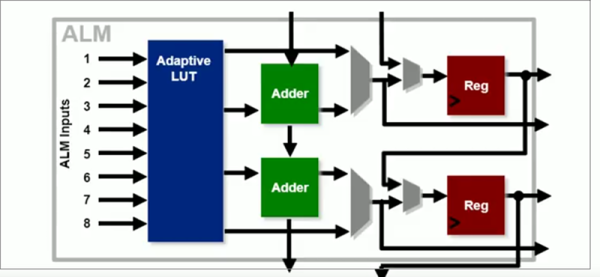

If you have enough pins, of course, you can use an external logic analyzer. If you have enough free space on the FPGA, you could put something like SUMP or SUMP2 in your design which would be very flexible. However, since these analyzers are made to be configurable from the host computer, they probably have a lot of circuitry that will compete with yours for FPGA space. You configure Verifla at compile time which is not as convenient but lets it have a smaller footprint.