Hacker uses pineapple on unencrypted WiFi. The results are shocking! Film at 11.

Right on, we’ve got some 3D printing cons coming up. The first is MRRF, the Midwest RepRap Festival. It’s in Goshen, Indiana, March 23-25th. It’s a hoot. Just check out all the coverage we’ve done from MRRF over the years. Go to MRRF.

We got news this was going to happen last year, and now we finally have dates and a location. The East Coast RepRap Fest is happening June 22-24th in Bel Air, Maryland. What’s the East Coast RepRap Fest? Nobody knows; this is the first time it’s happening, and it’s not being produced by SeeMeCNC, the guys behind MRRF. There’s going to be a 3D printed Pinewood Derby, though, so that’s cool.

జ్ఞా. What the hell, Apple?

Defcon’s going to China. The CFP is open, and we have dates: May 11-13th in Beijing. Among the things that may be said: “Hello Chinese customs official. What is the purpose of my visit? Why, I’m here for a hacker convention. I’m a hacker.”

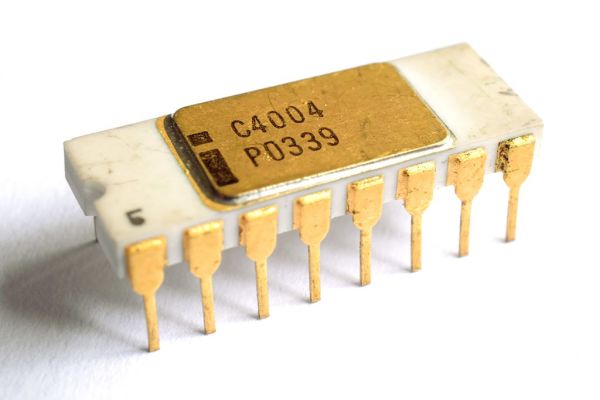

Intel hit with lawsuits over security flaws. Reuters reports Intel shareholders and customers have filed 32 class action lawsuits against the company because of the Spectre and Meltdown bugs. Are we surprised by this? No, but here’s what’s interesting: the patches for Spectre and Meltdown cause a noticeable and quantifiable slowdown on systems. Electricity costs money, and companies (server farms, etc.) can therefore put a precise dollar amount on what the Spectre and Meltdown patches cost them. Two of the lawsuits allege Intel and its officers violated securities laws by making statements about its products that were false. There’s also the issue of Intel CEO Brian Krzanich selling shares after he knew about Meltdown, but before the details were made public. Luckily for Krzanich, the rule of law does not apply to the wealthy.

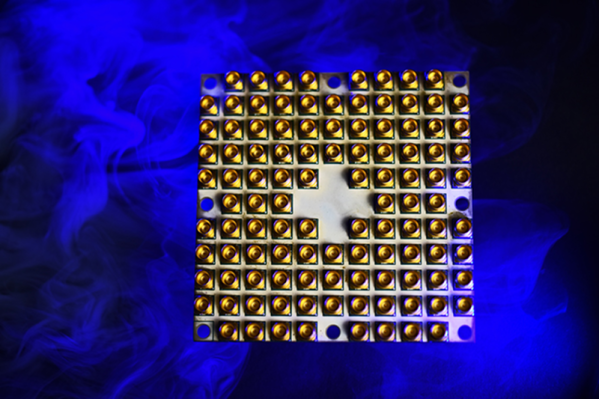

What does the Apollo Guidance Computer look like? If you think it has a bunch of glowy numbers and buttons, you’re wrong; that’s the DSKY, the user I/O device. The real AGC is basically just two 19″ racks. Still, the DSKY is very cool and a while back, we posted something about a DIY DSKY. Sure, it’s just 7-segment LEDs, but whatever. Now this project is a Kickstarter campaign. Seventy bucks gets you the STLs for the 3D printed parts, a BOM, and a PCB. $250 is the base price for the barebones kit.