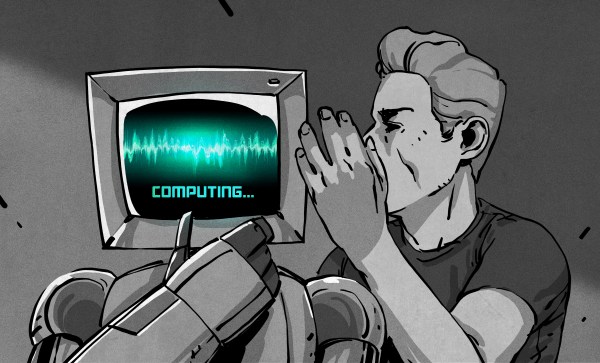

One of the most common uses of neural networks is the generation of new content, given certain constraints. A neural network is created, then trained on source content – ideally with as much reference material as possible. Then, the model is asked to generate original content in the same vein. This generally has mixed, but occasionally amusing, results. The team at [Made by AI] had a go at generating Christmas songs using this very technique.

The team decided that the easiest way to train their model would be to use note data from MIDI files. MIDI versions of Christmas songs are readily available and provide a broad base with which to train the model. For the neural network, the team chose a Long Short-Term Memory (LSTM) architecture. An LSTM is a recurrent network that carries state from one step of a sequence to the next, so each prediction depends on what came before. That sensitivity to context is important when dealing with structured formats like music or language.
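To make the idea of training on note data concrete, here is a minimal sketch of how a note sequence could be cut into (context, next note) pairs for a sequence model to learn from. This is not the team's code, and their actual preprocessing isn't described. The function name `make_training_pairs`, the window size, and the hand-typed melody (roughly the opening of Jingle Bells as MIDI pitch numbers) are all assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[Tuple[int, ...], int]


def make_training_pairs(notes: Sequence[int], window: int) -> List[Pair]:
    """Slide a fixed-size window over the notes.

    Each pair holds `window` consecutive pitches as context and the
    pitch that immediately follows them as the prediction target.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (tuple(notes[i:i + window]), notes[i + window])
        for i in range(len(notes) - window)
    ]


# Hypothetical input: MIDI pitch numbers typed by hand, not read from a file.
# 60 = C4, 62 = D4, 64 = E4, 67 = G4.
melody = [64, 64, 64, 64, 64, 64, 64, 67, 60, 62, 64]

pairs = make_training_pairs(melody, window=3)
```

A recurrent model such as an LSTM would be trained to predict the target from each context, then generate a tune by feeding its own predictions back in as new context. A real pipeline would also need note durations and timing, which this sketch ignores.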

The neural network generated five tunes, which you can listen to on the Made by AI SoundCloud page. The team notes their time was limited, and we think that with some further work and closer adherence to musical concepts such as structure and repetition, it might be possible to generate something a little catchier.

There are other applications for AI in music, too – like these intelligent musical prostheses.