The Hack-a-Day logo challenge keeps on bearing fruit. This tip comes from [Enrico Lamperti] from Argentina, who posted his follies as well as his success in creating a Hack-a-Day logo using a home-built scanning laser projector.

The build consists of a couple of small servos, a hacked-up pen laser, and an Arduino with some stored coordinates to draw out the image. As usual, the first challenge is powering external peripherals like servos. [Enrico] tackled this problem using six Ni-MH batteries and an LM2596 Simple Switcher power converter. The servos and Arduino get power directly from the battery pack, and the Arduino generates the PWM signals that drive the servos as they trace out the stored coordinate data. The laser is mounted on the servo assembly and is switched on and off by an Arduino pin through an NPN transistor. He also incorporated a potentiometer to adjust the servo calibration point.
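To make the coordinate-to-servo step concrete, here is a minimal sketch of how a stored coordinate might be turned into a servo pulse width with a trim offset from the calibration potentiometer. It is not [Enrico]'s code: the 0..255 coordinate range, the 1000 to 2000 µs pulse window, and the names `Point`, `coordToPulseUs` and `trimUs` are all assumptions made for illustration.

```cpp
#include <cstdint>

// Assumed values: typical hobby-servo pulse window; real servos vary.
constexpr long kMinPulseUs = 1000;
constexpr long kMaxPulseUs = 2000;
// Hypothetical storage format: each axis is stored as 0..255.
constexpr long kCoordMax = 255;

// One entry of the stored drawing (hypothetical layout).
struct Point {
    std::uint8_t x;
    std::uint8_t y;
    bool laserOn;  // whether the laser is lit while moving to this point
};

// Linearly map a stored coordinate to a pulse width in microseconds,
// shift it by a trim offset (e.g. derived from the potentiometer),
// and clamp the result to the assumed safe window.
// long is used so the multiplication does not overflow a 16-bit int.
long coordToPulseUs(long coord, long trimUs) {
    long pulse = kMinPulseUs
               + (coord * (kMaxPulseUs - kMinPulseUs)) / kCoordMax
               + trimUs;
    if (pulse < kMinPulseUs) pulse = kMinPulseUs;
    if (pulse > kMaxPulseUs) pulse = kMaxPulseUs;
    return pulse;
}
```

On an actual board, the returned value would be passed to each servo for every `Point` in turn, with the laser pin set according to `laserOn` before the move.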

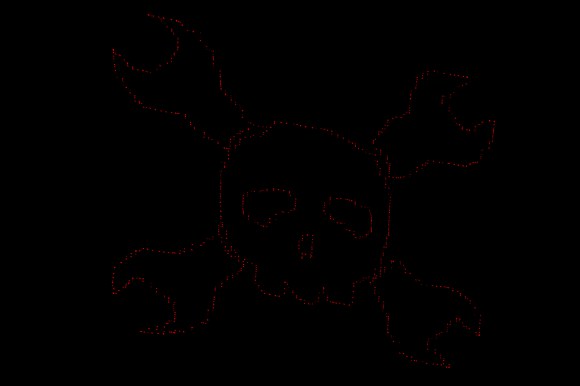

His first attempt, importing coordinate data generated by a Python script, was not very successful. He later used Processing with an SVG file to turn a path traced by mouse clicks into map data the Arduino could use to draw the Hack-a-Day logo. Photographing the completed drawing requires a long exposure in a dark room, but the results are impressive.

It’s an excellent project in which [Enrico] shares what he learned about using Servo.writeMicroseconds() instead of Servo.write() for performance, along with several other tweaks. He also shared the BOM, Fritzing diagram, Processing Creator and Simulator tools, and serial commands on GitHub. He wraps up with some options that he thinks would improve his device, and he welcomes any help others can offer to improve its performance. If you want to step it up a notch, you could create a laser video projector with an ATmega16 AVR microcontroller and some clever spinning tilted mirrors.
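One likely reason Servo.writeMicroseconds() helps in a build like this is resolution: Servo.write() accepts whole degrees, while Servo.writeMicroseconds() accepts the pulse width directly. The sketch below models that difference on a desktop compiler, assuming the stock Arduino Servo library's default 544 to 2400 µs range. The function names are hypothetical, and this is an approximation of the library's behavior rather than its actual source.

```cpp
// Default pulse limits of the stock Arduino Servo library (assumed unchanged).
constexpr long kServoMinUs = 544;
constexpr long kServoMaxUs = 2400;

// Approximates the pulse width that Servo.write(degrees) would request:
// the angle is clamped to 0..180 and mapped linearly onto the pulse range.
long pulseFromDegrees(long degrees) {
    if (degrees < 0) degrees = 0;
    if (degrees > 180) degrees = 180;
    return degrees * (kServoMaxUs - kServoMinUs) / 180 + kServoMinUs;
}

// Number of distinct commands available with whole-degree positioning.
long degreeSteps() { return 181; }

// Number of distinct commands available with per-microsecond positioning.
long microsecondSteps() { return kServoMaxUs - kServoMinUs + 1; }
```

Under these assumptions, adjacent whole-degree commands land roughly 10 µs apart, so addressing the pulse width directly gives about ten times as many commandable positions. Whether the servo mechanics can resolve steps that small is a separate question.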