[Dann Albright] writes about some small experiments he’s done in home security.

He starts with the simplest approach: purchase an off-the-shelf webcam and hook it up to software built for the task. The first package he uses is iSpy, which is free and open source. It adds basic features like motion detection, time stamping, logging, and an interface. He also explores some commercial alternatives.
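
For a rough idea of how camera software can decide that something moved, here is a minimal sketch of frame differencing in plain Python. This is a generic technique and not necessarily how iSpy does it. The function names, the grayscale list-of-lists frame format, and both thresholds are our own assumptions for illustration.

```python
def motion_score(prev_frame, curr_frame, pixel_threshold=25):
    """Return the fraction of pixels whose brightness changed noticeably.

    Frames are assumed to be equally sized lists of rows, each row a list
    of grayscale values from 0 to 255 (a hypothetical format for this sketch).
    """
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(prev_px - curr_px) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def is_motion(prev_frame, curr_frame, pixel_threshold=25, area_threshold=0.02):
    """Report motion when enough of the frame has changed between two frames."""
    return motion_score(prev_frame, curr_frame, pixel_threshold) >= area_threshold
```

Requiring a minimum changed area, rather than reacting to any single pixel, is one simple way to ignore sensor noise and small lighting flickers.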

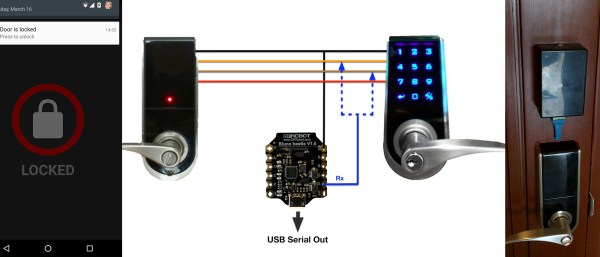

Next he delves a bit deeper, starting with a simple motion detector. When the Arduino detects motion using a PIR sensor, it gets a computer to text an alert. After that, the tutorial veers a little as he adds his WiFi light bulbs to the mix. Now he can send an email and change the color of the lights.
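
The computer side of a setup like this could look something like the sketch below. It is not taken from the tutorial: we assume the Arduino prints the line `MOTION` whenever the PIR sensor trips, and `watch_for_motion`, `send_alert`, and the cooldown are hypothetical names and choices of ours. The function accepts any iterable of text lines (for example, decoded lines read from the Arduino's serial port) and any callable that delivers the alert, whether that is a text, an email, or a command to the light bulbs.

```python
import time


def watch_for_motion(lines, send_alert, cooldown_s=60.0, clock=time.monotonic):
    """Call send_alert for motion events, at most once per cooldown_s seconds.

    lines: iterable of text lines; "MOTION" is an assumed message format.
    send_alert: hypothetical callback that delivers the alert text.
    clock: time source, injectable so the logic can be tested without waiting.
    Returns the number of alerts sent.
    """
    last_alert = None
    sent = 0
    for raw in lines:
        if raw.strip() != "MOTION":
            continue  # ignore anything that is not a motion report
        now = clock()
        # A PIR sensor can trigger repeatedly while someone is in the room,
        # so suppress further alerts until the cooldown has passed.
        if last_alert is None or now - last_alert >= cooldown_s:
            send_alert("Motion detected")
            last_alert = now
            sent += 1
    return sent
```

Trying it with `watch_for_motion(["MOTION\n", "MOTION\n"], print)` prints a single alert, because the second report arrives inside the cooldown window.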

We suppose that, from a security standpoint, it would really freak a burglar out if all the lights turned red when they walked into a room. Either way, there’s definitely a fun weekend project in playing around with all these systems.