[Michael Wessel] found some of his old DOS 3D graphics software and tried to run it on an 8088 PC. Adding an 8087 co-processor to speed up the rendering proved anything but straightforward, but the effort resulted in a useful little project.

There was a point around the end of the 1980s when the world of PCs had moved on to the 386, but the humble 8086 and 8088 hung around at the consumer end of the market. For Europeans that meant a variety of non-standard machines with brand names such as Amstrad and Schneider, and later on, surprisingly, even Sinclair and Commodore too.

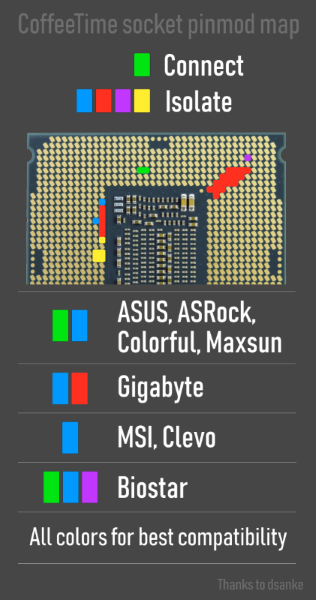

Of these, the Schneider Euro PC was an all-in-one design reminiscent of an Amiga or Atari ST, packing a serviceable 8088 PC with a single 3.5″ floppy drive. A cheap machine like this was never thought to need an 8087, and it lacked the usual co-processor socket on the motherboard, so [Michael] made a small daughterboard PCB that plugs into the 8088 socket and has space for both chips.

It’s a surprisingly simple circuit, as the two chips were designed to work together. It certainly had the desired effect on his frame rate, though we’re not sure how many other Euro PC users will need it. It does make us wonder, though, how quickly a modern microcontroller could emulate an 8087 for an even faster render time. Meanwhile, if you’re curious about the 8087, [Ken Shirriff] has of course taken a look at it.