Recently Radxa released the X4, an SBC that contains not only an N100 x86_64 SoC but also an RP2040 MCU connected to a Raspberry Pi-style double pin header. The Intel N100 is one of a range of Alder Lake-N SoCs, which use only Gracemont cores. Gracemont is an Atom-lineage design that Intel pitched as delivering roughly the performance of the Skylake core, first released in 2015, at much lower power. These cores are also used as ‘efficiency’ cores in Intel’s desktop CPUs. Because it is x86-based, the Radxa X4 can run Linux, Windows and other x86 operating systems from either NVMe (PCIe 3.0 x4) or eMMC storage. After getting his hands on one of these SBCs, [Bret] couldn’t wait to take a gander at what it can do.

Installing Windows 11 and Debian 12 on a 500 GB NVMe (2230) SSD fitted to the X4 worked much as you would expect on an x86 system. Depending on the OS, some drivers for the onboard Intel 2.5 Gbit Ethernet and WiFi were missing, but these were easily obtained from Intel’s site and installed. The board comes with an RTC battery already fitted and a full-featured AMI BIOS, and is available with up to 16 GB of LPDDR5 RAM.

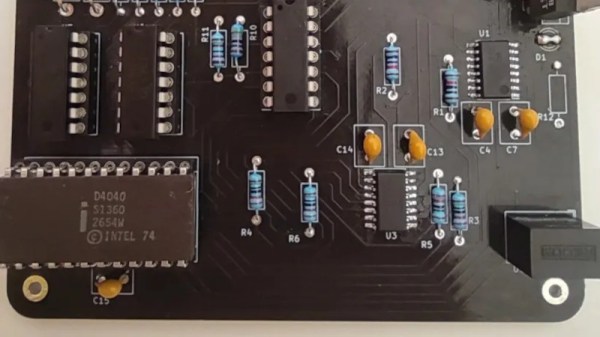

Using the system with the Radxa PoE+ HAT via the 2.5 Gbit Ethernet port also worked a treat once a quality PoE switch was used, even with the N100’s power limit raised from the default 6 W to 15 W. According to the board schematic, the RP2040 MCU on the mainboard is connected to the SoC via both USB 2.0 and UART. This means that all of the Raspberry Pi-style pins can be accessed from the N100 by way of the RP2040, making the X4 in many ways a more functional SBC than the Raspberry Pi 5, with a similar power envelope and cost.
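Since the header pins belong to the RP2040 rather than the N100, host software has to ask the MCU to drive them over one of those links. The sketch below illustrates that split from the N100 side. It is built entirely on assumptions: it presumes custom RP2040 firmware that reads newline-terminated ASCII commands such as `SET 17 1` and replies `OK` or `ERR <reason>`. This protocol and the helper names `encode_set`, `parse_reply` and `set_pin` are hypothetical, not something Radxa ships.

```python
"""Hypothetical N100-side helper for driving RP2040 GPIO over a serial link.

Assumes custom RP2040 firmware (hypothetical) that accepts newline-terminated
ASCII commands like "SET <pin> <0|1>" and answers "OK" or "ERR <reason>".
"""
from typing import BinaryIO


def encode_set(pin: int, level: bool) -> bytes:
    """Build a hypothetical 'SET <pin> <0|1>' command for one GPIO pin."""
    if not 0 <= pin <= 29:  # the RP2040 has GPIO0..GPIO29
        raise ValueError(f"RP2040 has no GPIO{pin}")
    return f"SET {pin} {int(level)}\n".encode("ascii")


def parse_reply(line: bytes) -> bool:
    """Return True for 'OK'; raise RuntimeError on 'ERR ...' replies."""
    text = line.decode("ascii").strip()
    if text == "OK":
        return True
    if text.startswith("ERR"):
        raise RuntimeError(text[3:].strip() or "unknown error")
    raise ValueError(f"unexpected reply: {text!r}")


def set_pin(port: BinaryIO, pin: int, level: bool) -> bool:
    """Send one command over an already-open serial stream and read the reply."""
    port.write(encode_set(pin, level))
    port.flush()
    return parse_reply(port.readline())
```

On real hardware, `port` could be any object with `write`, `flush` and `readline`. One example is a pyserial `serial.Serial` opened on whichever tty the RP2040’s UART or USB CDC interface shows up as. The device path depends on the OS and firmware, so it is left unspecified here. Keeping the protocol logic separate from the port makes it easy to test without the board.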

At $80 before shipping for the 8 GB (no eMMC) version that [Bret] looked at, one might ask whether a regular N100-based mini PC would be competitive, although features like PoE+ and the integrated RPi-compatible header are definite selling points.