For over a hundred years, good typists haven’t ‘hunted and pecked’; they’ve kept their fingers on the home row. That technique relies on physical keys, so touch typists have a very hard time with the on-screen keyboards used on tablets and other touchscreen devices. [Zach] is working on a new project called ASETNIOP to bring a chorded keyboard to these devices.

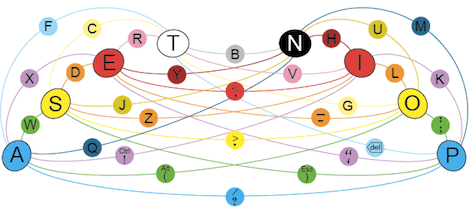

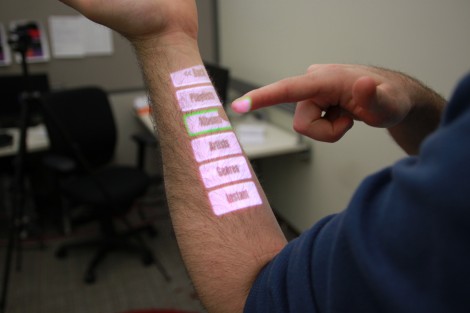

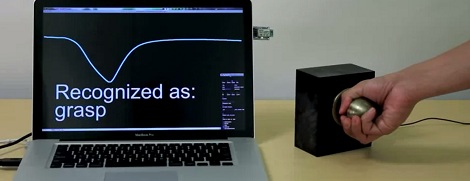

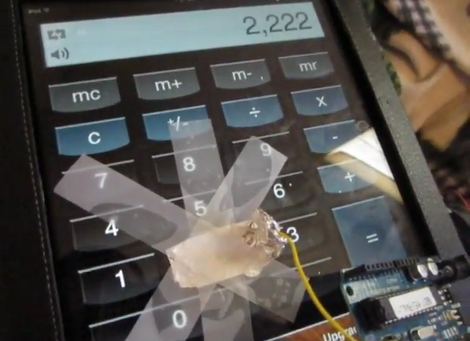

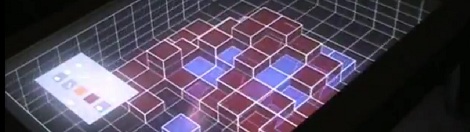

Instead of training a typist where to place their fingers, the technique used in most other keyboard replacements, ASETNIOP trains the typist which fingers to press. For example, typing ‘H’ requires the typist to press the index and middle fingers of their right hand against the touchscreen. In addition to touchscreens, ASETNIOP can be used with projection systems, Nintendo Power Glove replicas, and extremely large touchpads, including repurposed Nooks and Kindles.
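To make the "which fingers, not where" idea concrete, here is a minimal Python sketch of a chord lookup. It is not [Zach]'s implementation. The finger labels and the `decode_chord` helper are hypothetical, and the single-finger letters are an assumption based on the layout's name (A, S, E, T on the left hand and N, I, O, P on the right). Only the ‘H’ chord comes from the description above.

```python
# Hypothetical finger labels, ordered left pinky to right pinky.
# Assumption: one letter per finger, taken from the name "ASETNIOP".
SINGLE_FINGER_LETTERS = {
    "left_pinky": "a",
    "left_ring": "s",
    "left_middle": "e",
    "left_index": "t",
    "right_index": "n",
    "right_middle": "i",
    "right_ring": "o",
    "right_pinky": "p",
}

# A chord is the *set* of fingers pressed together, so the order in
# which the touches arrive and their screen position do not matter.
CHORDS = {
    frozenset([finger]): letter
    for finger, letter in SINGLE_FINGER_LETTERS.items()
}

# The one two-finger chord named in the article:
# right index + right middle types 'H'.
CHORDS[frozenset(["right_index", "right_middle"])] = "h"


def decode_chord(fingers):
    """Return the letter for the pressed fingers, or None if unmapped."""
    return CHORDS.get(frozenset(fingers))


if __name__ == "__main__":
    print(decode_chord(["right_index", "right_middle"]))
    print(decode_chord(["left_index"]))
```

Keying the table on a `frozenset` means the lookup only cares about which fingers are down, which is the property that lets a chorded layout work on a featureless touchscreen. A full layout would need an entry for every remaining letter and for punctuation; those are left out here rather than guessed.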

If you’d like to try ASETNIOP, there’s a tutorial that works with a physical keyboard, as well as one for the iPad. It feels a little weird at first, but it’s surely no more difficult to learn than a Dvorak keyboard.