One of the first tools added to an electronics toolbox, perhaps after a multimeter, is a soldering iron. From there, other soldering tools such as a hot air gun, reflow oven, soldering gun, or desoldering pump can be added as needed. But often a soldering iron is all that's needed, even for some specialized tasks, as [Mr SolderFix] demonstrates.

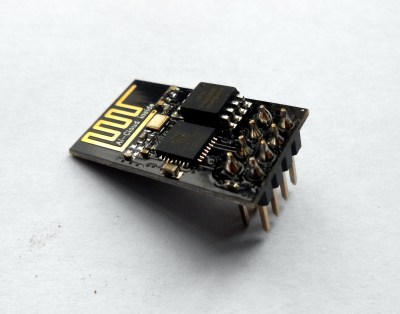

This technique removes a large connector from a PCB. The usual options are a heat gun, which risks damaging the PCB, or a tedious pin-by-pin process with a desoldering tool or braided wick. Instead, with just a soldering iron, a few pieces of wire are soldered around the pins to build one large solder blob that joins every pin of the connector to the wire. With everything bridged by solder and wire, the iron is pressed into this mass. The connector then falls right out of the board, and the wire can be dropped away from the PCB, taking most of the solder with it.

There is some cleanup afterwards, especially clearing excess solder from the PCB's holes, but it's nothing a little wick and effort can't handle. Compared to methods that require specialized tools or much more time, this is quite a technique to add to one's soldering repertoire. For more advanced desoldering, take a look at this method for protecting PCBs from thermal stress.
