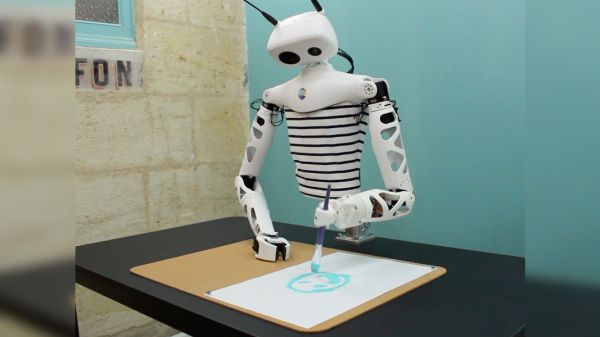

Humanoid robots always attract attention, but anyone who tries to build one quickly learns respect for a form factor we take for granted because we were born with it. Pollen Robotics wants to help move the field forward with Reachy: a robot platform offered both as a commercial product and as a wealth of design information shared online.

This French team has released open source robots before. We’ve looked at their Poppy robot, and we see a strong family resemblance in Reachy. Poppy was a very ambitious design with both arms and legs, but it could only ever walk with assistance. In contrast, Reachy focuses on just the upper body. One of the most interesting innovations is found in Reachy’s neck, a cleverly designed 3-DOF mechanism they call Orbita. Combined with two moving antennae at the top of the head, it lets Reachy emote a wide range of expressions despite not having much of a face. The remainder of Reachy’s joints are articulated with Dynamixel serial bus servos, though we see an optional Orbita-based hand attachment in the demo video (embedded below).
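
Dynamixel servos share one daisy-chained serial bus, and each servo is addressed by its ID inside a small framed packet. To illustrate what "serial bus servo" means in practice, here is a minimal Python sketch that builds instruction packets. It assumes the older Dynamixel Protocol 1.0 (the article doesn't say which protocol Reachy's servos use; newer models use Protocol 2.0, which has a different header and a CRC), and the helper names are hypothetical, not part of Pollen Robotics' software.

```python
# Sketch of Dynamixel Protocol 1.0 instruction packets (assumed protocol version).
# Packet layout: 0xFF 0xFF | ID | LENGTH | INSTRUCTION | PARAMS... | CHECKSUM

PING = 0x01        # instruction: ping a servo
WRITE_DATA = 0x03  # instruction: write bytes into the servo's control table
BROADCAST_ID = 0xFE


def dynamixel_v1_packet(servo_id: int, instruction: int, params: bytes = b"") -> bytes:
    """Hypothetical helper: frame one Protocol 1.0 instruction packet."""
    if not 0 <= servo_id <= BROADCAST_ID:
        raise ValueError("servo_id must be 0..253, or 254 for broadcast")
    length = len(params) + 2  # counts the instruction byte and the checksum byte
    body = bytes([servo_id, length, instruction]) + params
    checksum = (~sum(body)) & 0xFF  # inverted low byte of the sum of ID..params
    return b"\xff\xff" + body + bytes([checksum])


def goal_position_packet(servo_id: int, position: int) -> bytes:
    """Hypothetical helper: write Goal Position (address 30 on AX/MX-series
    Protocol 1.0 control tables) as a 2-byte little-endian value.
    The valid position range depends on the servo model."""
    if not 0 <= position <= 0xFFFF:
        raise ValueError("position must fit in two bytes")
    params = bytes([30, position & 0xFF, (position >> 8) & 0xFF])
    return dynamixel_v1_packet(servo_id, WRITE_DATA, params)


print(dynamixel_v1_packet(1, PING).hex(" "))       # ff ff 01 02 01 fb
print(goal_position_packet(1, 512).hex(" "))       # ff ff 01 05 03 1e 00 02 d6
```

In a real build these bytes would go out through a half-duplex serial adapter, and most people would use ROBOTIS's own Dynamixel SDK rather than hand-rolling packets.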

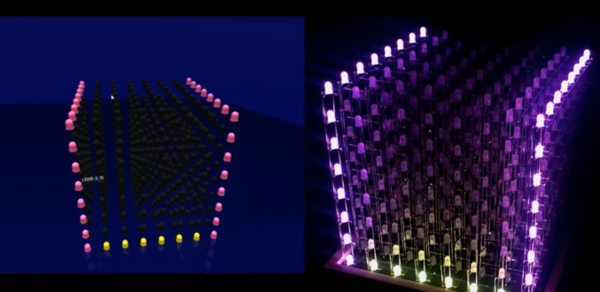

Reachy’s €19,990 price tag may be affordable relative to industrial robots, but it’s pretty steep for the home hacker. No need to fret: those of us with smaller bank accounts can still join the fun, because Pollen Robotics has open sourced a lot of Reachy’s details. Digging into this information, we see Reachy has a Google Coral for accelerating TensorFlow and a Raspberry Pi 4 for general computation. Mechanical designs are released via the web-based Onshape CAD. Reachy’s software suite on GitHub is primarily written in Python, which allows us to experiment within a Jupyter notebook. Simulation can be done within the Unity 3D game engine, which can optionally be compiled to run in a browser, as with the simulation playground. Academic robotics researchers are not left out of the fun either: ROS1 integration is available, though ROS2 support is still on the to-do list.

Reachy might not be as sophisticated as some humanoid designs we’ve seen, and without a lower body there’s no way for it to dance. But we are very appreciative of a company willing to share knowledge with the world. May it spark new ideas for the future.

[via Engadget]
