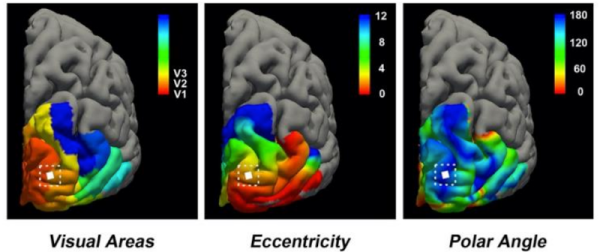

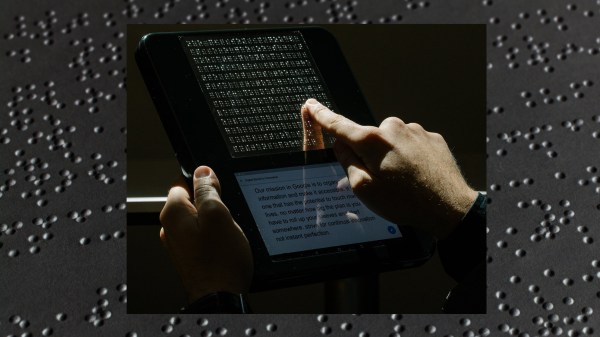

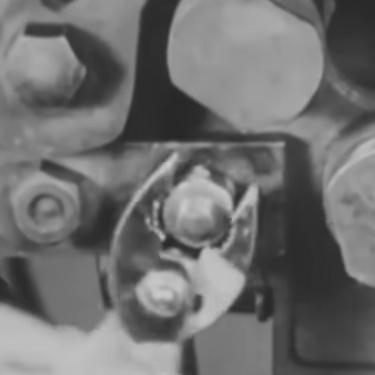

Nothing makes you appreciate your vision more than getting a little older and realizing that it used to be better and will probably get worse. But imagine how much harder life would be if you were totally blind. That is what happened to [Berna Gomez] when, at 42, she developed a medical condition that destroyed her optic nerves, leaving her blind in a matter of days and ending her career as a science teacher. But thanks to science, [Gomez] can now see, at least to some extent. After 16 years of blindness, she volunteered to have a penny-sized device with 96 electrodes implanted in her visual cortex. The research appears in the Journal of Clinical Investigation, and while it is a crude first step, it shows a lot of promise and uses some novel techniques to overcome certain limitations.

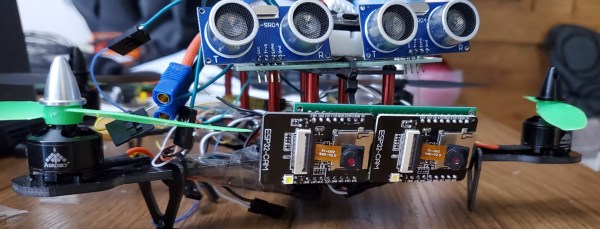

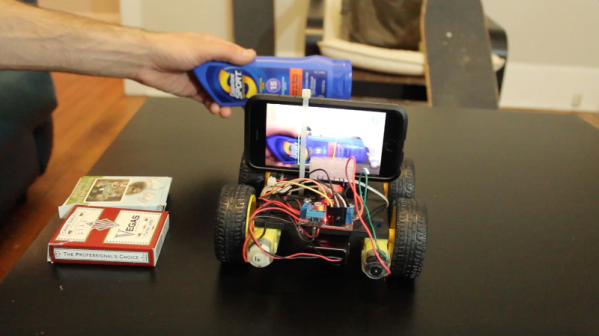

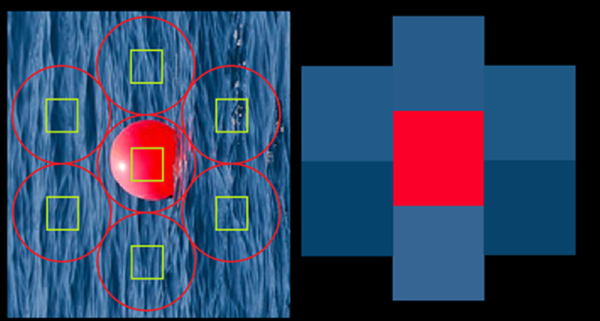

The 96 electrodes are arranged in a 10×10 grid with the four corner positions left empty. The resolution, of course, is lacking, but the project turned to a glasses-mounted camera to acquire images and process them, reducing them to electrode signals that may not map directly onto the image.
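To get a feel for how little resolution 96 electrodes gives you, here is a minimal Python sketch. It is not the researchers' processing pipeline, which, as noted, may not map the image directly onto the electrodes. It assumes the most naive scheme possible: block-average a grayscale frame down to 10×10, threshold it, and skip the four missing corners. The function names, the threshold value, and the test image are all made up for illustration.

```python
GRID = 10  # electrodes per side, per the article (10x10 minus four corners = 96)


def electrode_mask(n=GRID):
    """Return an n x n grid of booleans: True where an electrode exists."""
    corners = {(0, 0), (0, n - 1), (n - 1, 0), (n - 1, n - 1)}
    return [[(r, c) not in corners for c in range(n)] for r in range(n)]


def downsample(image, n=GRID):
    """Block-average a grayscale image (list of rows, values 0..255) to n x n.

    Assumes the image is at least n pixels in each dimension.
    """
    h, w = len(image), len(image[0])
    out = []
    for r in range(n):
        r0, r1 = r * h // n, (r + 1) * h // n
        row = []
        for c in range(n):
            c0, c1 = c * w // n, (c + 1) * w // n
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def to_pattern(image, threshold=128, n=GRID):
    """Naive direct mapping (an assumption, not the study's method):
    an electrode is 'on' if it exists and its image block is bright enough."""
    mask = electrode_mask(n)
    small = downsample(image, n)
    return [[mask[r][c] and small[r][c] >= threshold for c in range(n)]
            for r in range(n)]


# Hypothetical 20x20 test frame: a bright vertical bar on a dark background.
frame = [[255 if 8 <= x < 12 else 0 for x in range(20)] for y in range(20)]

pattern = to_pattern(frame)
mask = electrode_mask()
for r in range(GRID):
    # '#' = active electrode, '.' = inactive electrode, ' ' = no electrode
    print("".join("#" if pattern[r][c] else "." if mask[r][c] else " "
                  for c in range(GRID)))
```

Even a simple vertical bar ends up as just two columns of active electrodes, which hints at why the team's processing does more than shrink the picture.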
