We’ve seen a few of these builds before, but the build quality of [Mathieu]’s timeless fountain makes for an excellent display of mechanical skill showing off the wonder of blinking LEDs.

The idea behind a timeless fountain is actually pretty simple: put some LEDs next to a dripping faucet, flash the LEDs at the same rate the droplets fall, and you get a stroboscopic effect that makes tiny droplets of water appear to hover in mid-air.

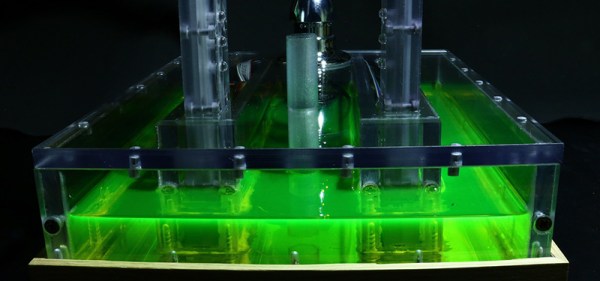

Like earlier builds, [Mathieu] is using UV LEDs and coloring the water with fluorescein, a UV-reactive dye. The LEDs are mounted on two towers, and at the top of the tower are a tiny, low-power IR laser and a photodiode. With the right code running on an ATxmega16A4, the lights blink in time with the falling water droplets, making each drop appear to hover in mid-air.
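To see why flashing in step with the drips freezes the drops, here is a minimal Python sketch of the timing. It is not [Mathieu]'s firmware: the function names, the 20 drops per second, the 10 ms flash delay, and the 0.30 m column are all made-up numbers for illustration, and the drops are treated as falling from rest with no air drag.

```python
G = 9.81  # gravitational acceleration in m/s^2


def fall_distance(age):
    """Distance (m) fallen by a drop released from rest `age` seconds ago."""
    return 0.5 * G * age * age


def lit_drops(flash_time, drip_period, column_height):
    """Positions (m below the nozzle) of every drop visible at one flash.

    Drops are assumed to leave the nozzle at t = 0, T, 2T, ... where T is
    drip_period. All parameters here are hypothetical, not from the build.
    """
    newest = int(flash_time // drip_period)  # index of the most recent drop
    positions = []
    for n in range(newest, -1, -1):  # walk from newest drop to oldest
        distance = fall_distance(flash_time - n * drip_period)
        if distance > column_height:
            break  # this drop and all older ones have left the column
        positions.append(distance)
    return positions


# Assumed numbers: 20 drops per second, flash 10 ms after each drop releases.
PERIOD = 1 / 20
DELAY = 0.010

flash_a = lit_drops(20 * PERIOD + DELAY, PERIOD, column_height=0.30)
flash_b = lit_drops(21 * PERIOD + DELAY, PERIOD, column_height=0.30)

for a, b in zip(flash_a, flash_b):
    print(f"flash A: {a:.4f} m   flash B: {b:.4f} m")
```

Every flash catches a different set of drops at the same heights, so the eye reads them as hovering. If the flash period were slightly longer or shorter than the drip period, each flash would catch the drops a little lower or higher than the last, and they would appear to drift slowly down or up.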

Blinking LEDs very, very quickly isn’t exactly hard. The biggest problem with this build was the mechanics. The frame of the machine was machined out of polycarbonate sheets and went together very easily. Getting a consistent drip from a faucet was a bit harder. It took about fifteen tries to get the design of the faucet nozzle right, but [Mathieu] eventually settled on a small output hole (about 0.5 mm) and a sharp nozzle angle of about 70 degrees.

[Mathieu] created a video of a few hovering balls of fluorescence. You can check that out below. It’s assuredly a lot cooler in real life without frame rate issues.

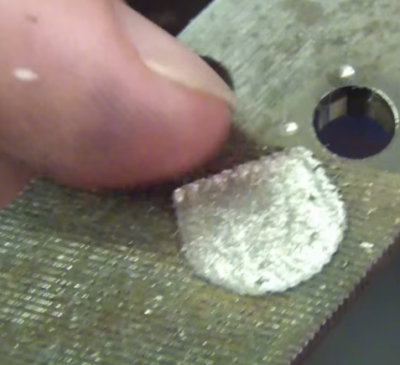

As you can imagine, a single spark won’t erode much metal. EDM machines fire tens of thousands of times per second. The exact frequencies, voltages, and currents are secrets the machine manufacturers keep close to their chests. [SuperUnknown] is zeroing in on 65 volts at 2 amps, running at 35 kHz. He’s made some great progress, gouging into hardened files, removing broken taps from brass, and even eroding the impression of a coin in steel.
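For a feel of what those numbers mean per spark, here is a rough back-of-the-envelope calculation in Python. The 65 V, 2 A, and 35 kHz figures come from the paragraph above; the 50% duty cycle and the idea that voltage and current hold steady for the whole on-time are assumptions made purely for illustration, not anything [SuperUnknown] has stated.

```python
VOLTAGE_V = 65.0         # from the text
CURRENT_A = 2.0          # from the text
FREQUENCY_HZ = 35_000.0  # from the text
ASSUMED_DUTY = 0.5       # hypothetical: fraction of each cycle the spark is on


def pulse_period_us(frequency_hz):
    """Time between the starts of successive sparks, in microseconds."""
    return 1e6 / frequency_hz


def energy_per_spark_mj(voltage, current, frequency_hz, duty_cycle):
    """Rough energy per spark in millijoules, assuming constant V and I."""
    on_time_s = duty_cycle / frequency_hz
    return voltage * current * on_time_s * 1e3


period = pulse_period_us(FREQUENCY_HZ)
energy = energy_per_spark_mj(VOLTAGE_V, CURRENT_A, FREQUENCY_HZ, ASSUMED_DUTY)
print(f"period: {period:.1f} us, energy per spark: {energy:.2f} mJ")
```

Under those assumptions each spark carries only a couple of millijoules, which is why it takes tens of thousands of them per second to remove a useful amount of metal.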

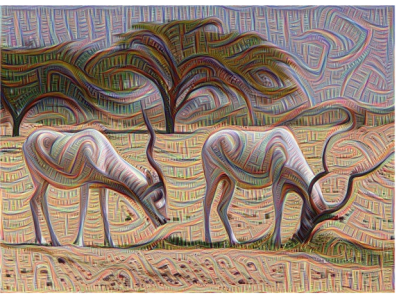

mind, however, is a simple question Google asked, and the resulting answer. To better understand the process, they wanted to know what was going on in the inner layers. They fed the network a picture of a truck, and out came the word “truck”. But they didn’t know exactly how the network came to its conclusion. To answer this question, they showed the network an image, and then extracted what the network was seeing at different layers in the hierarchy. Sort of like putting a Serial.print in your code to see what it’s doing.
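The print-statement analogy can be made concrete with a toy example. The Python sketch below is not Google's network or method: it is a tiny two-layer, fully connected network with hand-picked, hypothetical weights, and `forward_with_taps` is a made-up helper that records what comes out of each layer on the way through.

```python
def relu(values):
    """Clamp negative activations to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer; `weights` holds one row per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward_with_taps(inputs, layers):
    """Run `inputs` through `layers`, keeping a copy of every layer's output."""
    taps = []
    current = inputs
    for weights, biases in layers:
        current = relu(dense(current, weights, biases))
        taps.append(list(current))  # the "print statement" for this layer
    return current, taps


# Hypothetical weights chosen by hand so the arithmetic is easy to follow.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0]], [-1.0]),                   # layer 2: 2 units -> 1 output
]

output, taps = forward_with_taps([2.0, 1.0], layers)
for depth, activations in enumerate(taps, start=1):
    print(f"layer {depth}: {activations}")
```

A real image classifier has far more layers and millions of weights, but the idea of tapping intermediate outputs is the same: instead of only reading the final answer, you keep what each layer produced along the way.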

This technique gives them the level of abstraction for different layers in the hierarchy and reveals its primitive understanding of the image. They call this process