Most robots get around with tracks or wheels, but [Dave] had something different in mind. Sufficiently unbothered by the prospect of mixing electronics and water, [Dave] augmented a canoe with twin, paddle-bearing robotic arms to bring his concept to life: the RowboBoat. The result? A canoe that paddles itself, leaving the operator free to take a deep breath, sit back, and concentrate on not capsizing.

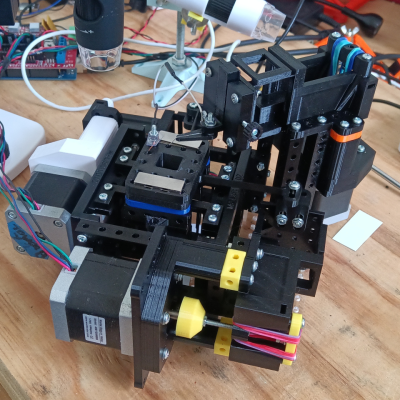

There are a couple of things we really like about this build, one of which is the tidiness of the robotic platform, which attaches to the canoe non-destructively. The platform is a combination of aluminum extrusion and custom brackets, and [Dave] designed it with the help of a 3D scan of the canoe. A canoe, after all, has nary a straight edge nor a right angle in sight. Being able to pull a 3D model into CAD helps immensely in such cases; we have also seen this technique used in refitting a van into an off-grid camper.

The other thing we like is the way that [Dave] drives the arms. The two PiPER robotic arms are driven with ROS, the Robot Operating System, running on a nearby Jetson Orin Nano SBC. The clever part is [Dave]'s observation that paddling and steering a canoe has a lot in common with a differential drive, which is akin to how a tank works. And so, for propulsion, ROS simply treats the paddle-bearing arms as though they were wheels in a differential drive. The arms don’t seem to mind a little water, and the rest of the electronics are protected by a pair of firmly-crossed fingers.
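For the curious, the differential-drive idea can be sketched in a few lines of Python. This is not [Dave]'s code, just a minimal illustration of the standard kinematics: a forward speed and a turn rate get split into a left and a right "wheel" speed, which a paddling controller could then interpret as stroke effort per side. The names `PaddleCommand` and `twist_to_paddles`, along with the `track_width` and `max_speed` values, are our own assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class PaddleCommand:
    """Hypothetical per-side stroke effort, each in the range -1.0 to 1.0."""
    left: float
    right: float


def twist_to_paddles(linear: float, angular: float,
                     track_width: float = 1.0,
                     max_speed: float = 1.0) -> PaddleCommand:
    """Split forward speed (m/s) and turn rate (rad/s) into per-side efforts.

    track_width stands in for the distance between the two paddles and
    max_speed for the fastest speed one side can manage; both are assumed
    values, not measurements from the real boat.
    """
    # Standard differential-drive inverse kinematics. A positive turn rate
    # means a counter-clockwise (left) turn, so the right side speeds up.
    left = linear - angular * track_width / 2.0
    right = linear + angular * track_width / 2.0

    # Scale both sides by the same factor so neither exceeds full effort
    # while the left/right ratio, and therefore the turn, is preserved.
    scale = max(abs(left), abs(right), max_speed)
    return PaddleCommand(left / scale, right / scale)


if __name__ == "__main__":
    print(twist_to_paddles(1.0, 0.0))  # straight ahead: both sides equal
    print(twist_to_paddles(0.0, 1.0))  # turn in place: sides oppose
```

A negative effort would correspond to a back-paddle on that side, which is how a tank (or a canoe) pivots in place.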

The canoe steers by joystick, but being driven by ROS it could be made autonomous with a little more work. [Dave] has his configuration and code for RowboBoat up on GitHub should anyone wish to take a closer look. Watch it in action in the video, embedded below.