The 3D printing revolution is upon us, and the technologies associated with these machines are evolving every day. Stereolithography (SLA) printers are becoming the go-to choice for high-resolution prints that just can't be fabricated on a filament-based machine. The ADAM DLP 3D printer project is [adambrx]'s entry into the Hackaday Prize and the first step in his quest for higher-quality prints on a DIY budget.

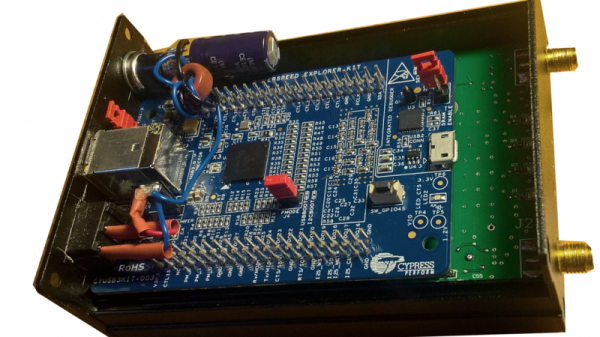

[adambrx]'s current iteration employs a Raspberry Pi 3 and a UV DLP projector, all enclosed in a custom frame assembly. The project logs show the printer's evolution from an Acer DLP projector to the current UV DLP light engine. The results are quite impressive for a DIY project: [adambrx] has posted images of 50-micrometer pillars and other nifty prints that show how much work has gone into the project.

It is safe to say that [adambrx] has outspent the average Hackaday Prize entry, with over €5,000 spent across roughly three years. Whether [adambrx] can keep this one true to its DIY roots remains to be seen; however, it is clear that this project has potential. We would love to see a high-resolution SLA printer that does not cost an arm and a leg.