Whilst swapping out the stereo in his car for a more modern Android based solution, [Aaron] noticed that it only utilised a single CAN differential pair to communicate with the car as opposed to a whole bundle of wires employing analogue signalling. This is no surprise, as modern cars invariably use the CAN bus to establish communication between various peripherals and sensors.

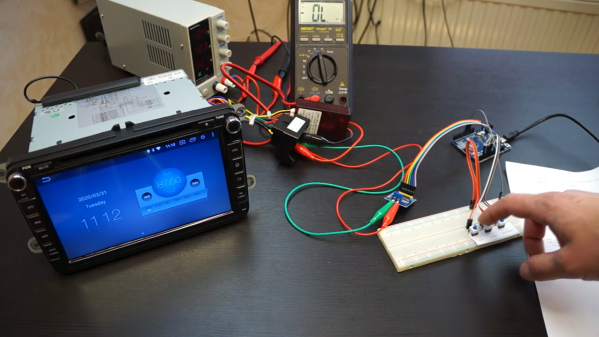

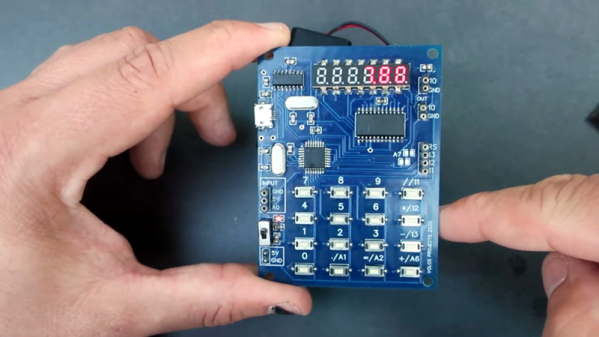

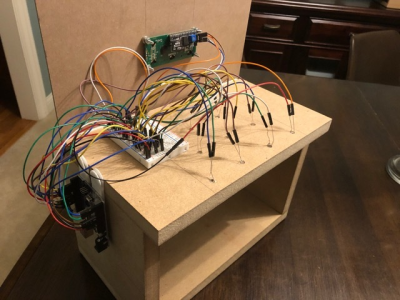

In a series of videos, [Aaron] details how he used this opportunity to explore some of the nitty-gritty of CAN communication. In Part 1 he designs a cheap, custom CAN bus sniffer using an Arduino, a MCP2515 CAN controller and a CAN bus driver IC, demonstrating how this relatively simple hardware arrangement could be used along with open source software to decode some real CAN bus traffic. Part 2 of his series revolves around duping his Android stereo into various operational modes by sending the correct CAN packets.

These videos are a great way to learn some of the basic considerations associated with the various abstraction layers typically attributed to CAN. Once you’ve covered these, you can do some pretty interesting stuff, such as these dubious devices pulling a man-in-the-middle attack on your odometer! In the meantime, we would love to see a Part 3 on CAN hardware message filtering and masks [Aaron]!

Continue reading “Custom Packet Sniffer Is A Great Way To Learn CAN”