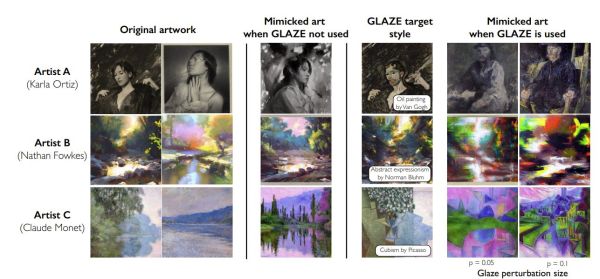

You might have heard about LLaMa or maybe you haven’t. Either way, what’s the big deal? It’s just some AI thing. In a nutshell, LLaMa is important because it allows you to run large language models (LLM) like GPT-3 on commodity hardware. In many ways, this is a bit like Stable Diffusion, which similarly allowed normal folks to run image generation models on their own hardware with access to the underlying source code. We’ve discussed why Stable Diffusion matters and even talked about how it works.

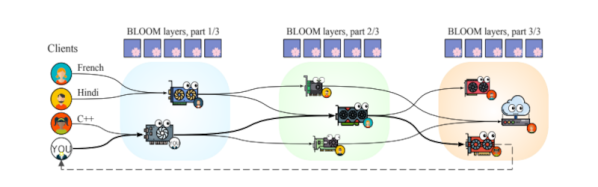

LLaMa is a transformer language model from Facebook/Meta research, which is a collection of large models from 7 billion to 65 billion parameters trained on publicly available datasets. Their research paper showed that the 13B version outperformed GPT-3 in most benchmarks and LLama-65B is right up there with the best of them. LLaMa was unique as inference could be run on a single GPU due to some optimizations made to the transformer itself and the model being about 10x smaller. While Meta recommended that users have at least 10 GB of VRAM to run inference on the larger models, that’s a huge step from the 80 GB A100 cards that often run these models.

While this was an important step forward for the research community, it became a huge one for the hacker community when [Georgi Gerganov] rolled in. He released llama.cpp on GitHub, which runs the inference of a LLaMa model with 4-bit quantization. His code was focused on running LLaMa-7B on your Macbook, but we’ve seen versions running on smartphones and Raspberry Pis. There’s even a version written in Rust! A rough rule of thumb is anything with more than 4 GB of RAM can run LLaMa. Model weights are available through Meta with some rather strict terms, but they’ve been leaked online and can be found even in a pull request on the GitHub repo itself. Continue reading “Why LLaMa Is A Big Deal”