[Aykut Çelik] uses some strong words to describe how he feels about his VW Polo’s current radio set-up. Words like “useless” are bandied about. What is a modern man supposed to do with a car that doesn’t have built-in navigation or Bluetooth connectivity with phones? Listen to the radio? There are actual (mostly) self-driving cars on the road now. No, [Aykut] moves forward, not backwards.

To fix this horrendous shortcoming in his car’s feature package, he set out to install a tablet in the dash. His blog write-up undersells the amount of work that went into the project, but the video after the break sets the record straight. He begins by covering the back of a face-down Samsung tablet with a large sheet of plastic film. Next he lays a sheet of fiberglass over the tablet and paints it with epoxy until it has satisfactorily clung to the back of the casing. After that comes quite a bit of work fitting an off-the-shelf panel display mount to the non-standard hardware. He eventually takes it to a local shop, which does the final fitting on the contraption.

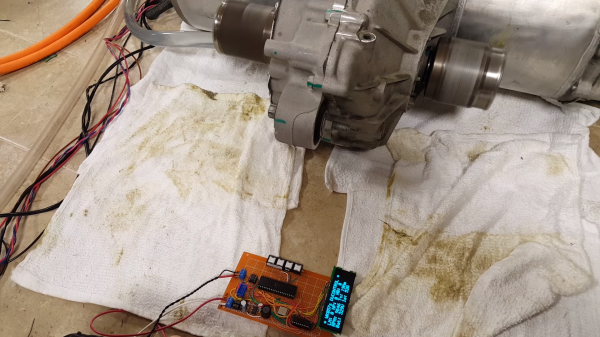

The electronics are a hodgepodge of needed parts: an amplifier, to replace the one that was attached to the useless husk of the prior radio set; a CAN shield for an Arduino, so that he could still use the steering wheel buttons; and a Bluetooth shield, so that the Arduino could talk to the tablet. Quite a bit of hacking happened, and the resulting software is on GitHub.
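For a rough idea of what the Arduino's job looks like, here is a minimal sketch of the button-to-tablet logic, written in Python for brevity rather than as Arduino firmware. It is not [Aykut]'s code. The CAN ID, the button codes, the command names, and the newline-terminated message format are all hypothetical placeholders, since the write-up does not list the real values.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values for illustration only: the real CAN ID and button
# codes used by the car are not given in the write-up.
STEERING_WHEEL_CAN_ID = 0x123
BUTTON_COMMANDS = {
    0x01: "VOLUME_UP",
    0x02: "VOLUME_DOWN",
    0x03: "NEXT_TRACK",
    0x04: "PREV_TRACK",
}


@dataclass(frozen=True)
class CanFrame:
    """A received CAN frame: an identifier plus up to 8 data bytes."""
    can_id: int
    data: bytes


def decode_button(frame: CanFrame) -> Optional[str]:
    """Return the command name for a steering wheel frame, or None."""
    # Ignore traffic from other ECUs and frames with no payload.
    if frame.can_id != STEERING_WHEEL_CAN_ID or len(frame.data) == 0:
        return None
    # Assumption: the first data byte carries the button code.
    return BUTTON_COMMANDS.get(frame.data[0])


def to_serial_message(command: str) -> bytes:
    """Encode a command as one newline-terminated ASCII line."""
    return (command + "\n").encode("ascii")


def handle_frame(frame: CanFrame) -> Optional[bytes]:
    """Turn a CAN frame into bytes for the Bluetooth link, if relevant."""
    command = decode_button(frame)
    if command is None:
        return None
    return to_serial_message(command)
```

In this sketch, anything that is not a known button press is dropped before it reaches the Bluetooth link, so the tablet only ever sees a short list of commands instead of raw bus traffic.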

The final assembly went together well. While it’s no Tesla console, it does get over-the-air updates whenever he feels like writing them. [Aykut] moves forward with the times.
