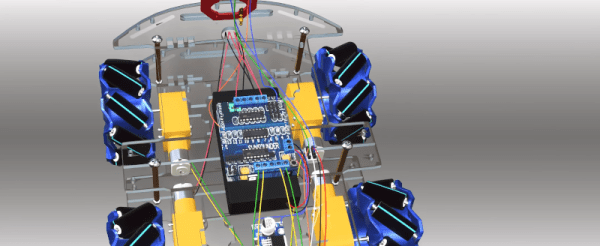

If you’ve dealt with robots or other wheeled projects, you’ve probably heard of mecanum wheels. These seemingly magic wheels can move a vehicle in any direction. If you’ve ever seen one, the basic idea is pretty obvious. They look more or less like ordinary wheels, but the rim is lined with rollers whose axes sit 45 degrees off the wheel’s normal movement axis. This causes the wheel’s driving force to act at a 45 degree angle instead of straight ahead. However, there are a lot of details that aren’t apparent from a quick glance. Why are the rollers tapered? How do you control a vehicle using these wheels? [Lesics] has a good explanation of how the wheels work in a recent video that you can see below.

With four wheels, one diagonal pair (the front right and the back left) has a net force vector of +45 degrees. The other diagonal pair is built as a mirror image, with the rollers angled the other way, so its net force vector is -45 degrees. The video shows how driving some or all of the wheels, forward or in reverse, can move the vehicle in many different directions.
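If you want to play with the idea in software, here is a minimal sketch of the usual way to mix a desired motion into four wheel speeds. It is not taken from the video. The function name `mecanum_wheel_speeds`, the parameter `k` (a stand-in for the chassis geometry), and the sign convention (`vx` forward, `vy` to the left, `omega` counterclockwise) are all assumptions for illustration, and the signs on a real robot depend on how the rollers and motors are actually mounted.

```python
def mecanum_wheel_speeds(vx, vy, omega, k=1.0):
    """Mix a desired chassis motion into four wheel speeds.

    Assumed convention (hypothetical, check it against your own robot):
      vx    -- forward speed
      vy    -- sideways speed, positive to the left
      omega -- rotation rate, positive counterclockwise
      k     -- geometry factor standing in for the wheelbase and track width

    Returns speeds in the order
    (front_left, front_right, rear_left, rear_right).
    """
    # The front-right / rear-left diagonal pair shares one roller
    # orientation, so both respond to (vx + vy). The mirrored pair
    # responds to (vx - vy).
    front_left = vx - vy - k * omega
    front_right = vx + vy + k * omega
    rear_left = vx + vy - k * omega
    rear_right = vx - vy + k * omega
    return front_left, front_right, rear_left, rear_right


if __name__ == "__main__":
    moves = {
        "forward": (1, 0, 0),
        "strafe left": (0, 1, 0),
        "diagonal": (1, 1, 0),
        "spin": (0, 0, 1),
    }
    for name, (vx, vy, omega) in moves.items():
        print(name, mecanum_wheel_speeds(vx, vy, omega))
```

Under these assumptions, the diagonal case drives only the front-right and rear-left wheels while the other pair sits still, which matches the idea of each diagonal pair pushing along its own 45 degree line.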