When flying drones in and around structures, the size of the drone is generally limited by the openings you want to fit through. Researchers at the University of Tokyo got around this problem by using an articulating structure for the drone frame, allowing the drone to transform from a large square to a narrow, elongated form to fit through smaller gaps.

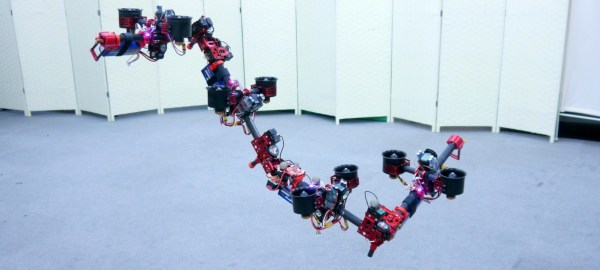

The drone is called DRAGON, which is somehow an acronym for the tongue-twisting description “Dual-Rotor Embedded Multilink Robot with the Ability of Multi-Degree-of-Freedom Aerial Transformation”. The drone consists of four segments, with a 2-DOF actuated joint between each pair of adjacent segments. A pair of ducted fan motors is attached to the middle of each segment with a 2-DOF gimbal that allows the fans to direct thrust in any direction relative to the segment. For normal flight, the segments are arranged in the square shape, with minimal movement between them. When a small gap is encountered, as demonstrated in the video after the break, the segments rearrange into a dragon-like shape that can pass through a gap in any plane.
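To get a feel for how the joint angles change the drone's footprint, here is a minimal sketch of a simplified model. It is not the researchers' method: it assumes a flat, planar chain of four equal-length links, considers only the yaw axis of each 2-DOF joint, and ignores the gimbaled fans entirely. The names `link_positions`, `footprint` and `link_length` are hypothetical. Turning each of the three joints by 90° closes the chain into a square, while setting them all to 0° stretches it into a narrow line.

```python
import math


def link_positions(joint_yaws_deg, link_length=1.0):
    """Return the (x, y) endpoints of a planar chain of equal-length links.

    Hypothetical simplified model: only the yaw axis of each 2-DOF joint is
    used, so the chain stays in one plane. A chain of N links has N - 1 joints.
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    # The first link points along +x; each joint rotates the next link
    # relative to the previous one.
    for angle in [0.0] + list(joint_yaws_deg):
        heading += math.radians(angle)
        x += link_length * math.cos(heading)
        y += link_length * math.sin(heading)
        points.append((x, y))
    return points


def footprint(points):
    """Width and height of the axis-aligned bounding box around the chain."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs), max(ys) - min(ys))


# Square: three 90-degree joints bring the last link back to the start.
square = link_positions([90, 90, 90])
# Elongated: all joints straight, so the chain is four links long and flat.
line = link_positions([0, 0, 0])
print(footprint(square), footprint(line))
```

In this toy model the square fits in a 1 × 1 box while the straightened chain is 4 links long but has zero width, which is the trade-off that lets the real drone squeeze through narrow gaps.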

Each segment has its own power source and controller, and the control software required to make everything work together is rather complex. The full research paper is unfortunately behind a paywall. The small diameter of the propellers and all the added components would be severe limiting factors in terms of lifting capacity and flight time, but the concept is definitely interesting.

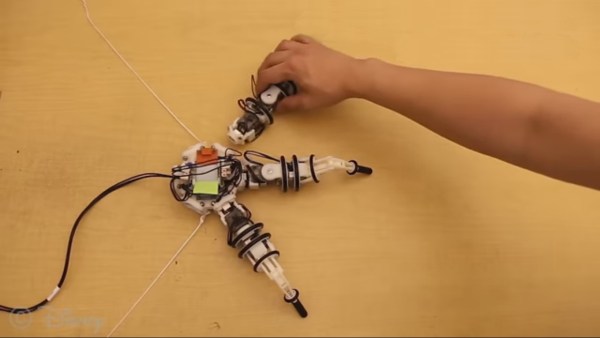

The idea of shape-shifting robots has been around for a while, and it becomes even more interesting when the segments can detach and reattach themselves to form modular robots. The 2016 Hackaday Grand Prize winner DTTO is a perfect example of this, although it lacked the ability to fly.
