The Tamagotchi’s modest technical complexity pales in comparison to its huge cultural impact, with more than 76 million units sold. It has spawned comics, stories, numerous toys, and offshoots such as an anime and two films. [JC] was looking through some of his old stuff, came across a Tamagotchi P1 (the original Tamagotchi), and decided to build a portable emulator for it. The P1’s ROM was dumped long ago and can be run in the MAME emulator. After all, the hardware is just an Epson E0C6S46 MCU, a 32×16 LCD with 8 additional icons, three buttons, and a piezo buzzer. Epson even hosts the MCU’s manual on its website. Here at Hackaday, we’ve seen Tamagotchis many times before, such as the infinite matrix of the Tamagotchi Singularity and a ROM dump of the latest generation of Tamagotchi, which is based on a 6502 core.

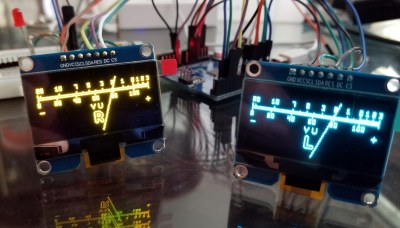

So what’s different about what [JC] is trying to accomplish? For starters, the tooling. The project is split into two parts: TamaLIB and TamaTool. TamaLIB is a hardware-agnostic P1 emulation library that talks to the hardware through a hardware abstraction layer (HAL). TamaTool is a frontend for TamaLIB that supports debugging, RAM editing, and ROM modification. In particular, it makes it easy to modify the images stored in the ROM, allowing for custom eggs and Tamagotchis. The homage to the Jolly Wrencher is a nice touch.

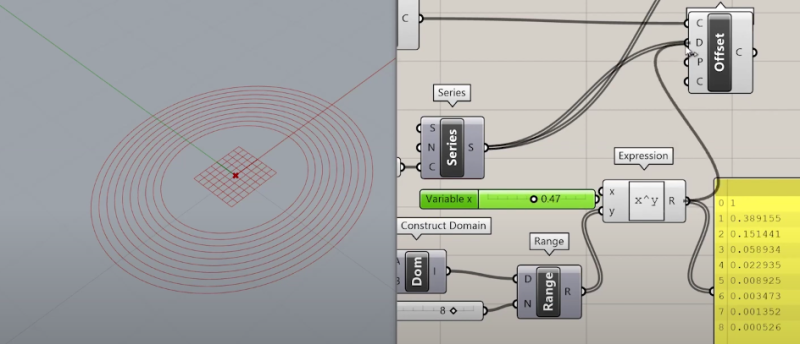

Because the emulation is platform-agnostic and access to a high-resolution timer is not guaranteed, keeping accurate cycle timing becomes tricky. The rather clever solution [JC] stumbled upon was to synchronize only at input polling, screen updates, and sound output. TamaLIB keeps track of how many CPU cycles have elapsed and regularly checks whether the emulation is running too fast or too slow. By slowing down or speeding up accordingly, the emulation appears to run in real time.

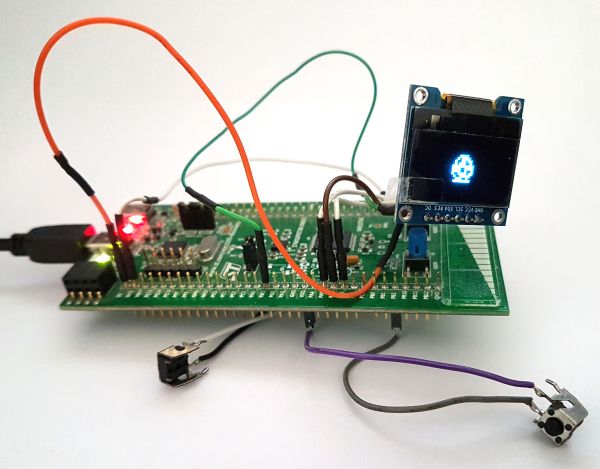

The last goal [JC] had was to run it on embedded hardware. Using an STM32F072 board and a cheap OLED screen, he built a portable emulated Tamagotchi called MCUGotchi. The code is available on GitHub and should work on most STM32 MCUs with a few small tweaks. Now that someone has gone to the effort of making it easy to run a Tamagotchi literally anywhere, it might not be long until we see a coffee maker or a smart light acting as a Tamagotchi. Perhaps the new joke will be: can it run Tamagotchi?

Video after the break.