When we consume our music online via streaming services, it is easy to forget the days when recordings lived on physical media, and to overlook the plethora of competing formats that vied for space in our hi-fi systems. As a chiptune enthusiast, though, [Andrew Rossignol] has an eye for dated recording formats: not only has he found a DAT machine from the 1990s, he’s hacked it to record HD video rather than hi-fi audio.

If you’ve not encountered DAT before, it’s best to think of the format as the equivalent of a CD, but on a tape cassette. It had its roots in the 1980s, and stored an uncompressed 16-bit CD-quality stereo audio data stream on the tape using a helical-scan mechanism similar to that found in a video cassette recorder. It was extremely expensive due to the complexity of the equipment, and the music industry hated it because they thought it would be used to make pirate copies of CDs. Despite those hurdles, it established a niche for itself among well-heeled musicians and audiophiles. If any Hackaday readers have encountered a DAT cassette, it most likely contained not audio but computer data: it was common in the 1990s for servers to use DAT tapes for backup purposes.

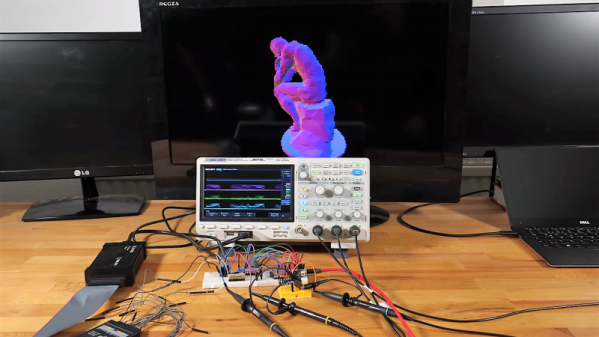

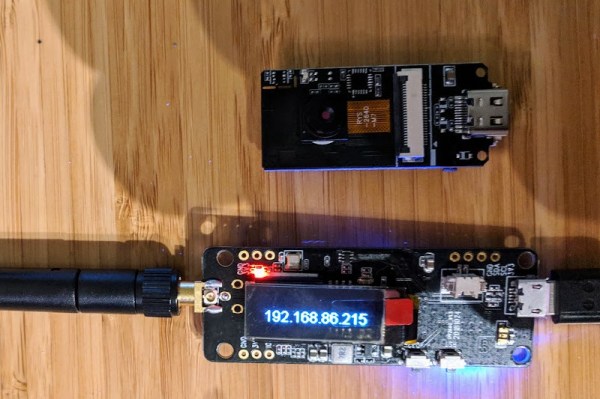

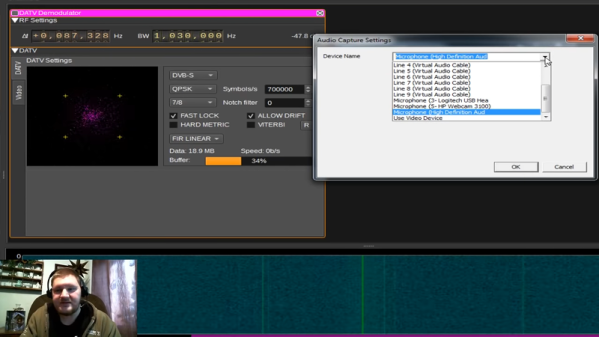

[Andrew]’s hack uses the SPDIF digital interface on his Sony DAT player to carry compressed video data instead of audio samples. SPDIF is a mature and well-understood standard that he calculated has a bandwidth of 187.5 kB/s, plenty to carry HD video using the H.265 compression scheme. The SPDIF data is brought into the computer via a USB sound card, and from there his software can either stream or retrieve the video. The stream is split into frames following the RFC 1662 format (PPP in HDLC-like framing) so that the receiver can find frame boundaries and stay synchronized, and he demonstrates it in the video below with a full explanation.
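To make the numbers and the framing a little more concrete, here is a minimal Python sketch. It is not [Andrew]’s code: the function names are hypothetical, and the 48 kHz, 16-bit stereo figures are an assumption chosen because they work out to exactly 187.5 KiB/s. The framing follows the general RFC 1662 recipe of a `0x7E` flag byte, `0x7D` escape byte, and a 16-bit frame check sequence, but leaves out the PPP address, control, and protocol fields and the optional escaping of control characters. Whether his encoder includes those is not stated here.

```python
# Sketch of RFC 1662-style (HDLC-like) byte framing. Names are illustrative.
FLAG = 0x7E      # marks the start and end of every frame
ESC = 0x7D       # escape byte
XOR_MASK = 0x20  # an escaped byte is sent as ESC, then byte ^ 0x20

# Assumed stream parameters: 48 kHz sample rate, 2 channels, 16 bits each.
SPDIF_BYTES_PER_SECOND = 48_000 * 2 * 16 // 8  # 192000 bytes/s = 187.5 KiB/s


def fcs16(data: bytes) -> int:
    """16-bit frame check sequence (reflected polynomial 0x8408)."""
    fcs = 0xFFFF
    for byte in data:
        fcs ^= byte
        for _ in range(8):
            fcs = (fcs >> 1) ^ 0x8408 if fcs & 1 else fcs >> 1
    return fcs ^ 0xFFFF


def encode_frame(payload: bytes) -> bytes:
    """Append the FCS, escape reserved bytes, and wrap in flag bytes."""
    body = payload + fcs16(payload).to_bytes(2, "little")
    out = bytearray([FLAG])
    for byte in body:
        if byte in (FLAG, ESC):
            out += bytes([ESC, byte ^ XOR_MASK])
        else:
            out.append(byte)
    out.append(FLAG)
    return bytes(out)


def decode_frame(frame: bytes) -> bytes:
    """Reverse encode_frame; raise ValueError if the frame is damaged."""
    if len(frame) < 2 or frame[0] != FLAG or frame[-1] != FLAG:
        raise ValueError("frame is not delimited by flag bytes")
    body = bytearray()
    escaped = False
    for byte in frame[1:-1]:
        if escaped:
            body.append(byte ^ XOR_MASK)
            escaped = False
        elif byte == ESC:
            escaped = True
        else:
            body.append(byte)
    if escaped or len(body) < 2:
        raise ValueError("truncated frame")
    payload = bytes(body[:-2])
    if fcs16(payload) != int.from_bytes(body[-2:], "little"):
        raise ValueError("frame check sequence mismatch")
    return payload


if __name__ == "__main__":
    chunk = bytes([0x00, 0x7E, 0x7D, 0xFF])  # stand-in for video data
    frame = encode_frame(chunk)
    print(frame.hex(" "))
    print(decode_frame(frame) == chunk)
```

Because the flag byte can never appear inside an encoded frame, a receiver that joins the stream partway through, as a tape player would after a seek, only has to wait for the next `0x7E` to regain synchronization, and the check sequence lets it discard frames damaged by tape errors.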
