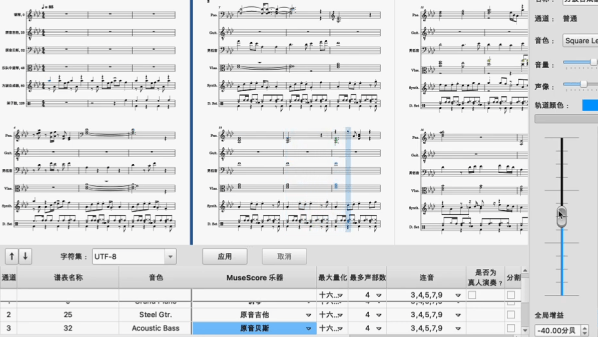

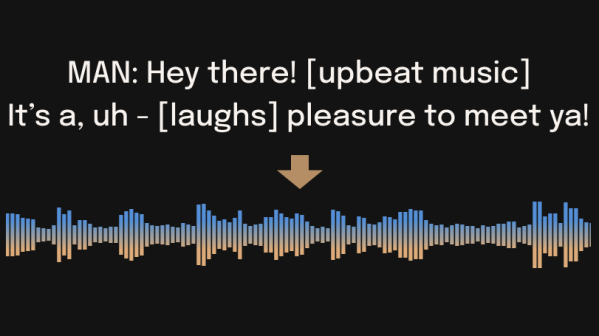

Music generation guided by machine learning can make for great projects, but there’s usually not much apparent control over the results. The system makes what it makes, and it’s an achievement if the output isn’t obvious cacophony. GETMusic is different: it allows a much more involved approach because it understands, and can create, music track by track. Among other things, this means one can generate a basic rhythm and melody first, then add further elements on top while leaving the existing ones unchanged.

GETMusic can make music from scratch, or guided by examples, and under the hood it uses a diffusion-based approach similar to the method behind AI image generators like Stable Diffusion. We’ve previously covered how Stable Diffusion works; here, the same basic principles guide the model from random noise to useful tracks of music rather than to images.
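To make the track-by-track idea concrete, here is a toy sketch in Python. It is not GETMusic's code or API. The grid layout, the `MASK` token, the `fill_tracks` function and the random "prediction" are all hypothetical stand-ins. It only illustrates the general shape of the process: the tracks being generated start out blank and are filled in over several steps, while the tracks supplied as context are never touched.

```python
import random

MASK = -1  # hypothetical placeholder meaning "not generated yet"


def fill_tracks(score, target_tracks, vocab_size=16, steps=4, seed=0):
    """Toy track-wise infilling.

    score:         list of tracks, each a list of integer note tokens
    target_tracks: indices of the tracks to (re)generate
    Only cells in target_tracks are rewritten; all other tracks are
    copied through unchanged. A real system would query a trained model
    where this sketch draws random tokens.
    """
    rng = random.Random(seed)
    out = [row[:] for row in score]  # work on a copy, leave the input alone

    # Start from "nothing": blank out the tracks we want to generate.
    for t in target_tracks:
        out[t] = [MASK] * len(out[t])

    # Visit the blank cells in random order, a few per step.
    cells = [(t, i) for t in target_tracks for i in range(len(out[t]))]
    rng.shuffle(cells)
    per_step = -(-len(cells) // steps)  # ceiling division

    for s in range(steps):
        for t, i in cells[s * per_step:(s + 1) * per_step]:
            # Stand-in for a model's prediction given the current grid.
            out[t][i] = rng.randrange(vocab_size)
    return out


if __name__ == "__main__":
    drums = [1, 0, 1, 0]
    melody = [5, 6, 7, 8]
    bass = [0, 0, 0, 0]  # to be generated
    result = fill_tracks([drums, melody, bass], target_tracks=[2])
    for name, track in zip(("drums", "melody", "bass"), result):
        print(name, track)
```

Calling `fill_tracks` again with a different `target_tracks` list would add or replace another track in the same way, with everything already in place passed through as fixed context.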

Just a few years ago we saw a neural network trained to generate Bach, and while it was capable of moments of brilliance, its output wasn’t uniformly listenable. GETMusic is on an entirely different level. The model and code are available online, and there is a research paper to accompany them.

You can watch a video putting it through its paces just below the page break, and there are more videos on the project summary page.
