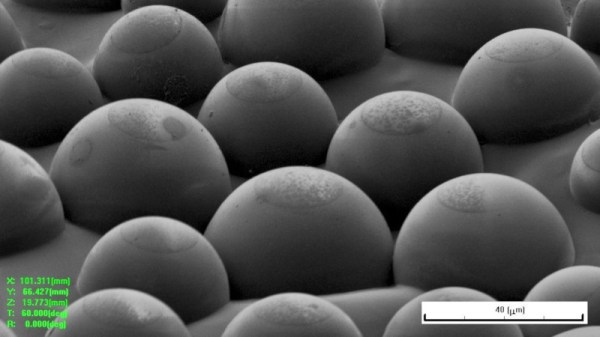

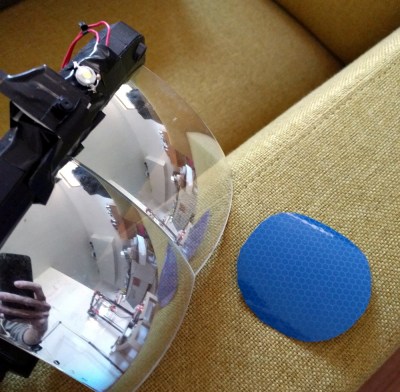

Retroreflectors are interesting materials, named for their ability to reflect light back toward its source. Examples include street signs, bicycle reflectors, and cat’s eyes, which so hauntingly pierce the night. They’re also used in the Tilt Five tabletop AR system for holographic gaming. [Adam McCombs] got his hands on a Tilt Five gameboard and put it under the microscope to see how it works.

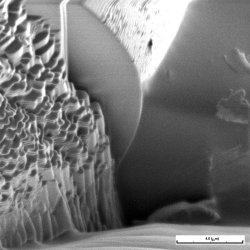

[Adam] isn’t mucking around, fielding a focused ion beam microscope for the investigation. This scans a beam of gallium metal ions across a sample for imaging. Because the ions are far heavier than the electrons in a more typical electron beam, they hit hard enough to ablate the sample as well as image it. This allows [Adam] to very finely chip away at the surface of the retroreflector to see how it’s made.

The analysis reveals that the retroreflecting spheres are glass, coated in metal. They’re stuck to a surface with an adhesive, which coats the bottom of the spheres and acts as an etch mask. The metal coating is then removed from the part of each sphere that sticks out above the adhesive layer. This allows light to enter through the transparent part of the sphere and bounce off the remaining metal coating back toward the source, creating a sheet covered in retroreflectors.
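For a rough feel of why a metal-backed glass sphere sends light back where it came from, here is a minimal sketch using the standard paraxial ball-lens formula. The write-up doesn't give the bead diameter or the refractive index of the glass, so the numbers below are illustrative assumptions only, and the function name is made up for this example. The idea is that the closer the focus falls to the rear surface of the sphere, where the metal coating sits, the more tightly the reflected light retraces its path.

```python
def ball_lens_back_focal_length(diameter_um: float, n: float) -> float:
    """Paraxial back focal length of a glass sphere in air.

    Measured from the rear surface of the sphere, in the same units as
    the diameter. A value near zero means incoming parallel light comes
    to a focus on the rear surface, right where a mirror coating would be.
    """
    if n <= 1.0:
        raise ValueError("refractive index must be greater than 1")
    # Effective focal length of a ball lens, measured from its centre.
    efl = n * diameter_um / (4.0 * (n - 1.0))
    # Subtract the radius to measure from the rear surface instead.
    return efl - diameter_um / 2.0


if __name__ == "__main__":
    assumed_diameter_um = 50.0  # hypothetical bead size, not from the article
    for assumed_index in (1.5, 1.9, 2.0):  # hypothetical glass indices
        bfl = ball_lens_back_focal_length(assumed_diameter_um, assumed_index)
        print(f"n = {assumed_index}: focus {bfl:.2f} um behind the rear surface")
```

In this paraxial approximation an index of 2.0 puts the focus exactly on the back of the sphere, while ordinary glass at around 1.5 focuses some distance behind it. Real beads also suffer spherical aberration, so treat this as a back-of-the-envelope picture and not a description of the actual Tilt Five material.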

[Adam] does a great job of describing both the microscopy and production techniques involved, before relating it to the fundamentals of the Tilt Five AR technology. It’s not the first time we’ve heard from [Adam] on the topic, and we’re sure it won’t be the last!

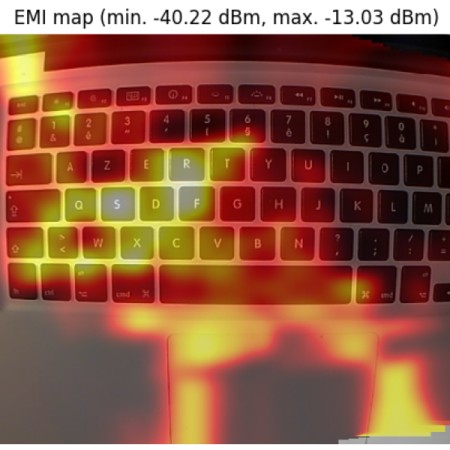

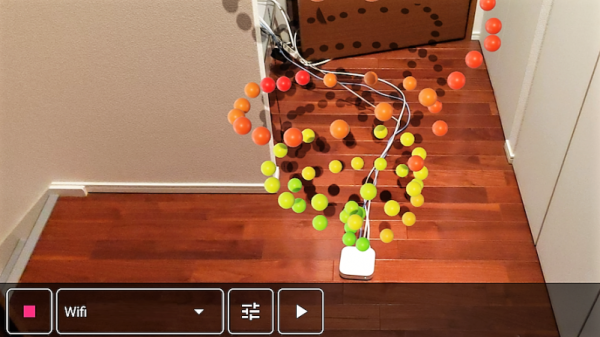

Unwilling to go full [Geordi La Forge] to be able to visualize RF, [Ken Kawamoto] built the next best thing –

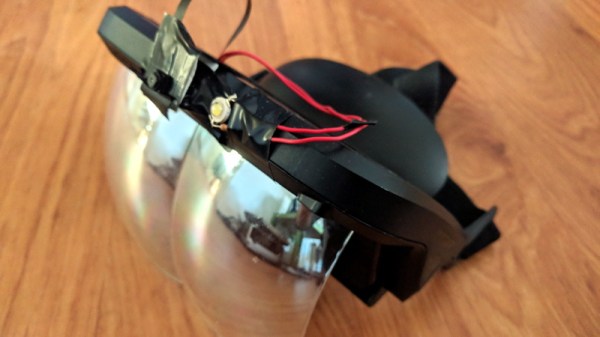

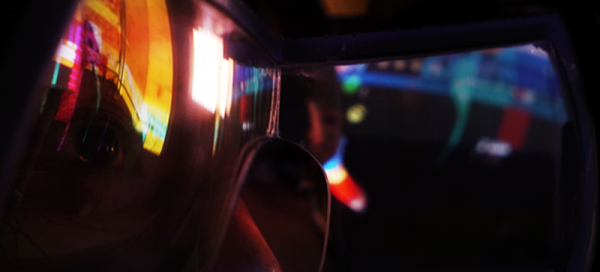

A serious setback to the aspiring AR hacker has been the fact that while the design is open, the lenses absolutely are not off the shelf components. [Smart Prototyping] aims to change that, and recently announced in a blog post