[Vlad] wrote in to tell us about his latest project—an RC boat that autonomously navigates between waypoints. Building an autonomous vehicle seems like a really complicated project, but [Vlad]’s build shows how you can make a simple waypoint-following vehicle without a background in autonomy and control systems. His design is inspired by the Scout autonomous vehicle that we’ve covered before.

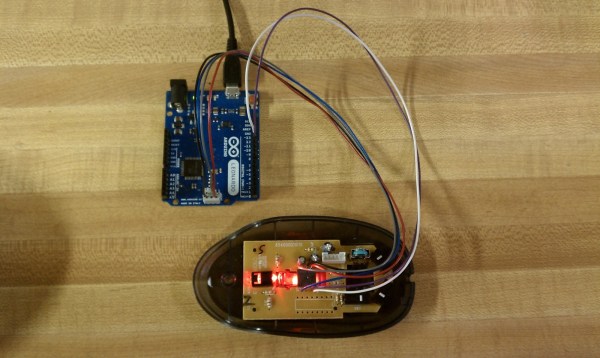

[Vlad] started prototyping with an Arduino, a GPS module, and a digital compass. He wrote a quick sketch that uses the compass and GPS readings to control a servo that steers towards a waypoint. [Vlad] took his prototype outside and walked around to make sure that steering and navigation were working correctly before putting it in a boat. After a bit of tweaking, his controller steered correctly and advanced to the next waypoint after the GPS position was within 5 meters of its goal.
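The steering logic described above boils down to three pieces: the bearing from the current GPS fix to the waypoint, the signed difference between that bearing and the compass heading, and a distance check that advances to the next waypoint inside a 5 meter radius. Here is a minimal Python sketch of that idea. It is not [Vlad]'s actual sketch; the function names, the haversine distance formula, and the `navigate_step` structure are all assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, good enough for short hops


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance between two fixes, in meters."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from fix 1 to fix 2, in degrees clockwise from north."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlmb)
    return math.degrees(math.atan2(y, x)) % 360.0


def heading_error_deg(target, heading):
    """Signed shortest turn from heading to target, in (-180, 180]; positive = turn right."""
    return (target - heading + 180.0) % 360.0 - 180.0


def navigate_step(position, heading, waypoints, index, radius_m=5.0):
    """Hypothetical helper: return (heading_error, index), or (None, index) when done.

    Skips every waypoint that is already within radius_m of the current position.
    """
    lat, lon = position
    while index < len(waypoints) and distance_m(lat, lon, *waypoints[index]) <= radius_m:
        index += 1
    if index >= len(waypoints):
        return None, index
    target = bearing_deg(lat, lon, *waypoints[index])
    return heading_error_deg(target, heading), index
```

The wrap-around in `heading_error_deg` is the part that is easy to get wrong: with a compass heading of 350° and a target bearing of 10°, the vehicle should turn 20° right rather than 340° left. The returned error could then be scaled into a servo angle.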

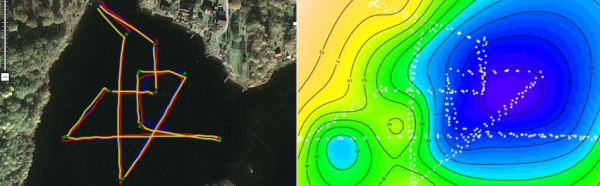

Next, [Vlad] took to the water. His first attempt was a home-built airboat, which looked awesome but unfortunately didn’t work very well. In the end he bought a $20 boat on eBay and built a MOSFET-based motor controller to drive its dual thrusters. This design worked much better, and after a bit of PID tuning the boat was autonomously navigating between waypoints in the water. In the future [Vlad] plans to use the skills he learned on this project to make an autopilot for the 38-foot catamaran his dad is building (an awesome project by itself!). Watch the video after the break for more details and to see the boat in action.
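For readers wondering what "PID tuning" means on a boat with two thrusters, here is a rough Python sketch. It assumes the controller input is the heading error in degrees and that steering is done by differential thrust, speeding up one thruster and slowing the other. The post doesn't say how [Vlad] mixed his motor outputs, so the `PID` class, the `mix_thrusters` helper, and the gain values are all illustrative assumptions.

```python
class PID:
    """Textbook PID controller with a clamped output (no integral anti-windup)."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first update

    def update(self, error, dt):
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))


def mix_thrusters(base, turn):
    """Hypothetical mixer: positive turn steers right by favoring the left thruster.

    Returns (left, right) throttle values clamped to the range 0.0 to 1.0.
    """
    left = max(0.0, min(1.0, base + turn))
    right = max(0.0, min(1.0, base - turn))
    return left, right


# Example: 20 degrees of heading error to the right, proportional gain only.
pid = PID(kp=0.01, ki=0.0, kd=0.0, out_limit=0.5)
turn = pid.update(20.0, dt=0.1)
left, right = mix_thrusters(0.5, turn)
```

Tuning then means adjusting `kp`, `ki`, and `kd` until the boat turns toward the waypoint briskly without weaving back and forth across the course line. The two throttle values would be written out as PWM duty cycles to the MOSFET driver.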

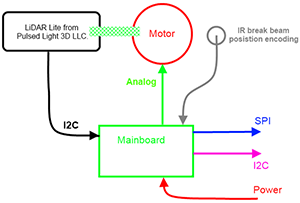

[Patrick] has spent a lot of time around ground and aerial based autonomous robots, and over the last few years, he’s noticed a particular need for teams in robotics competitions to break through the ‘sensory bottleneck’ and get good data of the surrounding environment for navigational algorithms. The most well-funded teams in autonomous robotics competitions use LIDARs to scan the environment, but these are astonishingly expensive. With that, [Patrick] set out to create a cheaper solution.