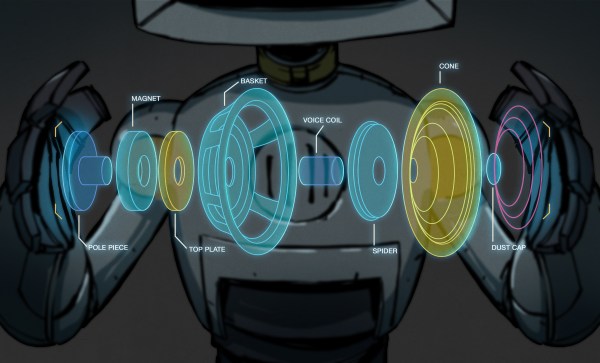

In previous episodes of this long-running series on high-quality audio, we’ve stayed at every point in the real world of physical audio hardware. From the human ear to the loudspeaker, from the DAC to measuring distortion, this is all stuff that can happen on your bench or in your Hi-Fi rack.

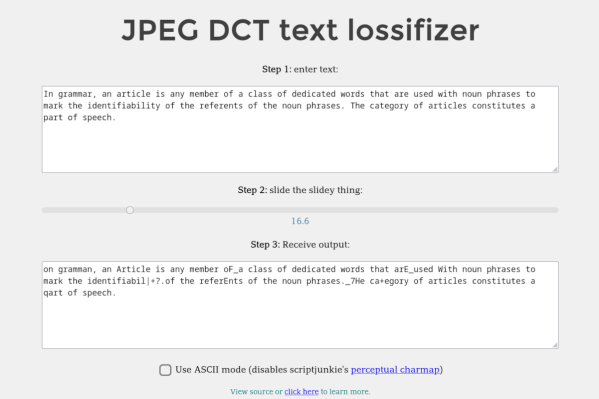

We’re now going to diverge for the first time from the practical world of hardware into the theoretical world of mathematics, as we consider a very contentious topic in audio. It is now normal for audio to have some form of digital compression applied to it, and some of that compression has an effect on what is played back through our speakers and headphones. When a compression algorithm changes what we hear, that’s distortion in audio terms, but how much distortion is there, and how do we even measure it? It’s time to dive in and play with some audio files.