A mind is a terrible thing to waste – but an awesome thing to hack. We last visited brain hacks back in July of 2015. Things happen fast on Hackaday.io. Miss a couple of days, and you'll miss a bunch of great new projects, including some awesome new biotech hacks. This week, we're checking out some of the best new mind and brain hacks on Hackaday.io.

We start with [Daniel Felipe Valencia V] and Brainmotic. Brainmotic is [Daniel's] entry in the 2016 Hackaday Prize. Smart homes and the Internet of Things are huge buzzwords these days. [Daniel's] project aims to meld this technology with electroencephalography (EEG), so that your mind can control your home. This would be great for anyone, but it's especially important for people with physical disabilities. Brainmotic uses the open-hardware OpenBCI board as its brain interface, and [Daniel's] software and hardware will create a bridge between that interface and the user's home.

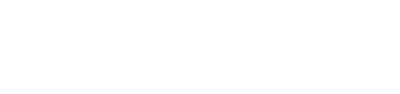

Next we have [Angeliki Beyko] with Serial / Wireless Brainwave Biofeedback. EEG used to be very expensive to implement, but it has become cheap enough that brain-controlled toys are now on the market. [Angeliki] is hacking these toys into useful biofeedback tools, which can be used to visualize, and even help control, the user's state of mind. [Angeliki's] weapon of choice is the MindFlex series of toys. With the help of a PunchThrough LightBlue Bean, she got the EEG headsets talking over Bluetooth. A bit of fancy software on the PC side turns the brainwave signals received by the MindFlex into simple graphs. [Angeliki] even went on to create a Mind-Controlled Robotic Xylophone based on this project.

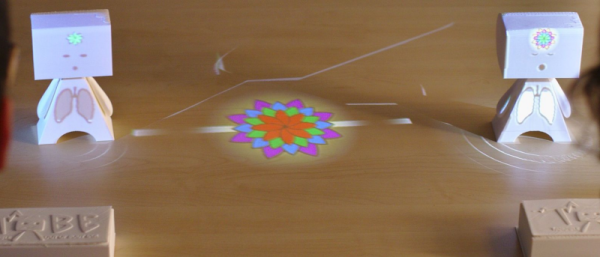

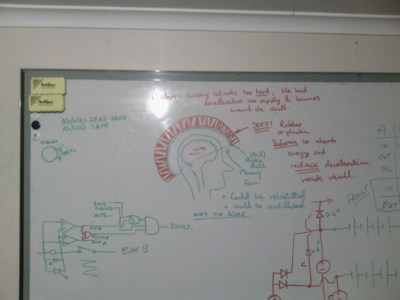

Next is [Stuart Longland], who hopes to protect brains with Improved Helmets. Traumatic Brain Injury (TBI) is in the medical technology spotlight these days. As bad as it may be, TBI is just one of several types of head and neck injuries one may sustain in a bicycle or motorcycle accident. Technology to reduce these injuries exists and is built into some new helmets, but many of these technologies, such as MIPS, are patented. [Stuart] is working to create a more accurate model of the head within the helmet, and of the brain within the skull. From this data he intends to create a license-free protection system which can be used in new helmets as well as retrofitted to existing ones.

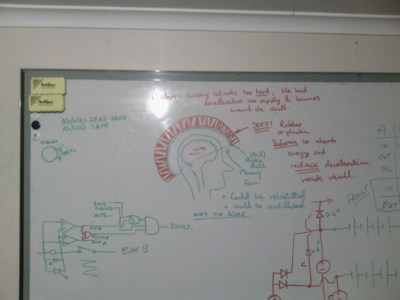

Finally we have [Tom Meehan], whose entry in the 2016 Hackaday Prize is Train Your Brain with Neurofeedback. [Tom] is hoping to use neurofeedback to improve quality of life for people with epilepsy, autism, ADHD, and other conditions. Like [Angeliki] up above, [Tom] is hacking hardware from NeuroSky, in this case the MindWave headset. [Tom's] current goal is to pull data from the TGAM1 board inside the MindWave. Once he has the EEG data, a Java application running on the PC side will display the user's EEG information. This is a brand-new project with updates coming quickly – so it's definitely one to watch!
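
Pulling data out of a NeuroSky board largely comes down to decoding its serial packet stream. The Java sketch below is a minimal illustration, assuming the board emits packets in NeuroSky's published ThinkGear format: two 0xAA sync bytes, a payload length under 170, the payload, and a checksum that is the bitwise-inverted low byte of the payload sum. It is not [Tom's] code. The class and method names (`ThinkGearParser`, `parse`, `Reading`, `bytes`) are hypothetical, it reads from a byte array rather than a live serial port, and it only decodes the signal-quality, attention, meditation, and raw-wave rows.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch (illustrative names, not the project's code): decode
// NeuroSky ThinkGear packets from a byte buffer, assuming the published layout.
public class ThinkGearParser {
    static final int SYNC = 0xAA;
    static final int EXCODE = 0x55;
    static final int CODE_POOR_SIGNAL = 0x02;
    static final int CODE_ATTENTION = 0x04;
    static final int CODE_MEDITATION = 0x05;
    static final int CODE_RAW_WAVE = 0x80;

    /** Values decoded from one valid packet; -1 or null means "not in this packet". */
    static final class Reading {
        int poorSignal = -1;
        int attention = -1;
        int meditation = -1;
        Integer rawWave = null;

        @Override
        public String toString() {
            return "poorSignal=" + poorSignal + " attention=" + attention
                    + " meditation=" + meditation + " raw=" + rawWave;
        }
    }

    /** Scans a buffer and returns one Reading per packet whose checksum matches. */
    static List<Reading> parse(byte[] stream) {
        List<Reading> readings = new ArrayList<>();
        int i = 0;
        while (i + 2 < stream.length) {
            // Every packet starts with two SYNC bytes.
            if ((stream[i] & 0xFF) != SYNC || (stream[i + 1] & 0xFF) != SYNC) {
                i++;
                continue;
            }
            int pLength = stream[i + 2] & 0xFF;
            if (pLength >= 170) { // 170 is a further SYNC byte; larger values are invalid
                i++;
                continue;
            }
            int payloadStart = i + 3;
            int checksumIndex = payloadStart + pLength;
            if (checksumIndex >= stream.length) break; // packet cut off at end of buffer
            // Checksum: low 8 bits of the payload sum, bitwise inverted.
            int sum = 0;
            for (int j = payloadStart; j < checksumIndex; j++) sum += stream[j] & 0xFF;
            if (((~sum) & 0xFF) == (stream[checksumIndex] & 0xFF)) {
                readings.add(parsePayload(stream, payloadStart, checksumIndex));
                i = checksumIndex + 1;
            } else {
                i++; // corrupted packet: resynchronize on the next byte
            }
        }
        return readings;
    }

    /** Walks the data rows stored in payload bytes [start, end). */
    static Reading parsePayload(byte[] b, int start, int end) {
        Reading r = new Reading();
        int k = start;
        while (k < end) {
            int excodeLevel = 0;
            while (k < end && (b[k] & 0xFF) == EXCODE) { excodeLevel++; k++; } // extended-code prefixes
            if (k >= end) break;
            int code = b[k++] & 0xFF;
            int length = 1; // codes below 0x80 carry exactly one value byte
            if (code >= 0x80) {
                if (k >= end) break;
                length = b[k++] & 0xFF; // codes 0x80 and up carry an explicit length byte
            }
            if (k + length > end) break; // malformed row: stop decoding this payload
            if (excodeLevel == 0) {
                if (code == CODE_POOR_SIGNAL) r.poorSignal = b[k] & 0xFF;
                else if (code == CODE_ATTENTION) r.attention = b[k] & 0xFF;
                else if (code == CODE_MEDITATION) r.meditation = b[k] & 0xFF;
                else if (code == CODE_RAW_WAVE && length == 2) {
                    r.rawWave = (int) (short) (((b[k] & 0xFF) << 8) | (b[k + 1] & 0xFF)); // signed, big-endian
                }
                // Other codes (e.g. 0x83 EEG band powers) are skipped in this sketch.
            }
            k += length;
        }
        return r;
    }

    /** Builds a byte[] from int literals such as 0xAA. */
    static byte[] bytes(int... values) {
        byte[] out = new byte[values.length];
        for (int n = 0; n < values.length; n++) out[n] = (byte) values[n];
        return out;
    }

    public static void main(String[] args) {
        // One junk byte, then an attention/meditation packet, then a raw-wave packet.
        byte[] stream = bytes(0x12,
                0xAA, 0xAA, 0x06, 0x02, 0x00, 0x04, 0x30, 0x05, 0x40, 0x84,
                0xAA, 0xAA, 0x04, 0x80, 0x02, 0xFF, 0xF0, 0x8E);
        for (Reading r : parse(stream)) System.out.println(r);
        // poorSignal=0 attention=48 meditation=64 raw=null
        // poorSignal=-1 attention=-1 meditation=-1 raw=-16
    }
}
```

Feeding real headset data would mean passing bytes read from a serial port into `parse()`; the serial library and baud rate depend on the hardware and are left out here. Resynchronizing one byte at a time after a bad checksum keeps the parser simple, at the cost of rescanning a few bytes when a packet is corrupted.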

If you want more mind hacking goodness, check out our freshly updated brain hacking project list! Did I miss your project? Don't be shy, just drop me a message on Hackaday.io. That's it for this week's Hacklet. As always, see you next week. Same hack time, same hack channel, bringing you the best of Hackaday.io!