Immersive audio is the new hotness in the recording world. Once upon a time, mono was good enough. Then someone realized humans have two ears, and everyone wanted stereo. For most of us, that’s where it stopped, but audio connoisseurs kept going into increasingly baroque surround-sound setups — ending in Immersive Audio, audio that is meant to fully reproduce the three-dimensional soundscape of the world around us. [DJJules] is one of those audio connoisseurs, and to share the joy of immersive audio recording with the rest of us, he’s developed Maurice, a compact, low-cost immersive microphone.

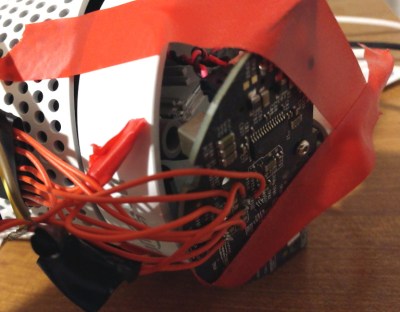

Maurice is, technically speaking, a symmetrical ORTF3D microphone array. ORTF is not a descriptive acronym; it stands for Office de Radiodiffusion Télévision Française, the fine people who developed this type of microphone for stereo use. The typical stereo ORTF setup uses two cardioid microphones spaced 17 cm apart, with their axes angled 110 degrees from each other. Maurice arrays four such pairs, all oriented vertically and facing 90 degrees from one another for fully immersive, 8-channel sound. Arranged this way, the microphones together capture sound from every direction while keeping good separation between the channels for later reproduction. The mountings are all 3D printed, and [DJJules] kindly provides STLs.
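To get a feel for why those two numbers matter, here is a rough sketch of the cues a single stereo ORTF pair produces for a distant sound source. It is not taken from [DJJules]'s write-up: the ideal cardioid formula, the far-field approximation, the 343 m/s speed of sound, and the function names are all assumptions made for illustration, and it models one conventional horizontal pair rather than Maurice's full eight-capsule geometry.

```python
import math

SPACING_M = 0.17        # capsule spacing quoted in the article
ANGLE_DEG = 110.0       # included angle between the two capsule axes
SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 °C


def cardioid_gain(off_axis_deg):
    """Ideal first-order cardioid (assumed): 1.0 on axis, 0.0 directly behind."""
    return 0.5 * (1.0 + math.cos(math.radians(off_axis_deg)))


def ortf_pair(source_deg):
    """Return (time difference in ms, level difference in dB) for a far-field source.

    source_deg is measured from the pair's centre line, positive toward the
    right-hand capsule. Positive results mean the right channel is earlier/louder.
    Intended for sources in front of the pair (-90 to +90 degrees).
    """
    half_angle = ANGLE_DEG / 2.0
    # Far-field path difference between the capsules is spacing * sin(angle).
    dt_ms = 1000.0 * SPACING_M * math.sin(math.radians(source_deg)) / SPEED_OF_SOUND
    gain_right = cardioid_gain(source_deg - half_angle)
    gain_left = cardioid_gain(source_deg + half_angle)
    level_db = 20.0 * math.log10(gain_right / gain_left)
    return dt_ms, level_db


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        dt_ms, level_db = ortf_pair(angle)
        print(f"{angle:3d} deg: {dt_ms:.3f} ms, {level_db:.1f} dB")
```

Under these assumptions the spacing sets the largest possible arrival-time difference (about half a millisecond for a source fully to one side), while the angle between the capsules sets the level difference. Both cues together are what give the channels their separation.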

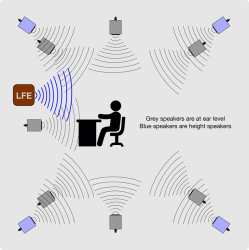

Recording eight audio channels simultaneously is not trivial for the uninitiated, but fortunately, [DJJules] includes a how-to in his post. We particularly like his tip to use resistor color coding to identify the XLR cables for different microphone channels. Playback, too, requires special setup and processing. [DJJules] talks about listening on his 7.1.4 speaker setup, which you can find in a companion post. That’s twelve speakers in all, counting the subwoofer.
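If you don't have the resistor color code memorized, the cable-labeling tip can be sketched in a few lines. The color order below is the standard resistor digit code; mapping channel N directly to the color for digit N is our assumption, and the exact assignment [DJJules] uses may differ. The function name is hypothetical.

```python
# Standard resistor digit colors, index 0 through 9.
RESISTOR_COLORS = [
    "black", "brown", "red", "orange", "yellow",
    "green", "blue", "violet", "grey", "white",
]


def cable_color(channel):
    """Return the tape color for a channel number (assumed: channel N -> digit N)."""
    if not 0 <= channel <= 9:
        raise ValueError("the resistor code only covers digits 0 through 9")
    return RESISTOR_COLORS[channel]


if __name__ == "__main__":
    # Eight microphone channels, numbered 1 through 8.
    for channel in range(1, 9):
        print(f"channel {channel}: {cable_color(channel)}")
```

With that mapping, channel 1 gets brown and channel 8 gets grey, so anyone who can read a resistor can trace a cable back to its input without a legend.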

There are high-end headphones that claim to reproduce an immersive sound field as well, but we can’t help but wonder if you’d miss the “true” experience without head tracking. Even with regular department-store headphones, the demo recordings linked via the Instructable sound great, but that probably just reflects the quality of the individual microphones.

Audio can be a make-or-break addition to VR experiences, so that would seem to be an ideal use case for this sort of technology. Maurice isn’t the only way to get there; we previously featured [DJJules]’s ambisonic microphone, which is another way to capture a soundscape. What do you think, is this “immersive audio” the new frontier of Hi-Fi, or do we call it a stereo for a reason? Discuss in the comments!