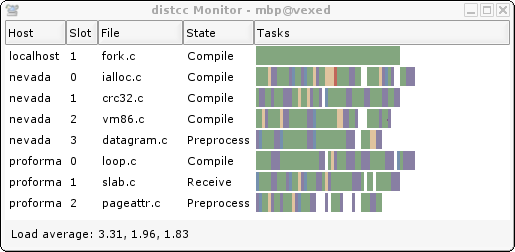

The motto of Sun Microsystems back in the day was “The Network Is The Computer” which might be kind of relevant when CPUs were slower and single-core affairs, but lately to get a faster compile, you’d simply throw more cores and memory at the problem. The thing is, most of us don’t do huge compilations all that often, we can’t remember the last time we even attempted a Linux kernel build. However if you do find yourself with a sudden need to do so, and have access to a pile of machines hooked to a network, then why not check out distcc: the fast distributed C/C++ Compiler? We’ve seen a few mentions in comments and a HaD links article referencing it, but never explicitly covered the tool. So here we go.

Continue reading “Accelerate Your Large Builds Locally With Distcc”