Every December and May, the senior design projects from engineering schools start to roll in. Since the students aren’t yet encumbered by real-world detractors (like management), the projects are often exceptional and unique, and they solve problems we never even knew we had. Such is the case with [Mark] and [Peter]’s senior design project: a pick-and-place machine that promises to solve all of life’s problems.

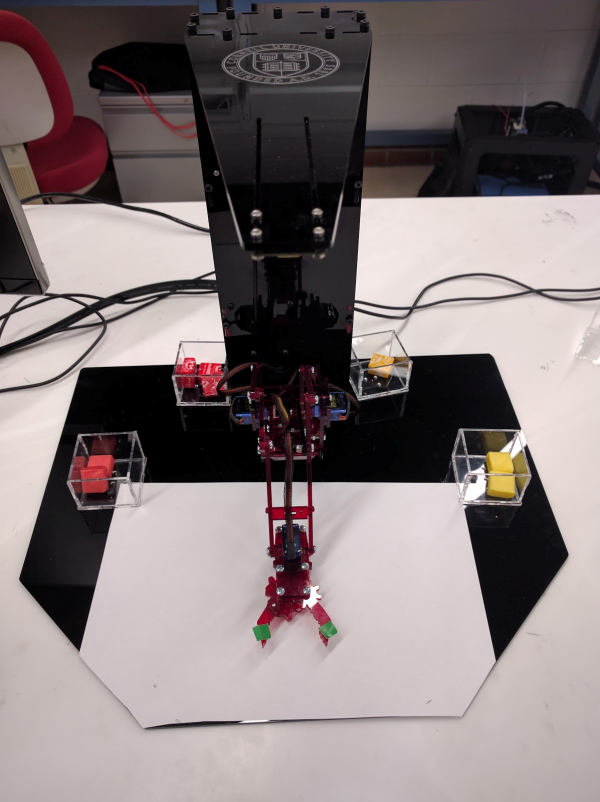

Of course we’ve seen pick-and-place machines before, but this one is different. Rather than identifying resistors and capacitors to set on a PCB, this machine identifies and sorts candies. The robot, a version of the MeArm, has three degrees of freedom and a computer vision system that tells the arm what it’s picking up and where to place it. A Raspberry Pi handles the computer vision and feeds data to a PIC32, which interfaces with the hardware.

One of the requirements for the senior design class was to keep the budget under $100, which they accomplished by using pre-built solutions wherever possible. Robot arms with dependable precision can’t even come close to that price constraint. But this project overcomes the MeArm’s lack of precision by using incremental corrective steps to reach proper alignment. This is covered in the video demo below.
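For a rough feel of how incremental correction can make up for an imprecise arm, here is a minimal Python sketch. It is not [Mark] and [Peter]'s code: the `SloppyArm` class, the `measure_offset` function, the 20% error figure, the gain, and the tolerance are all hypothetical stand-ins chosen for illustration. The idea is simply to measure the remaining offset, command a partial move, and repeat until the gripper is close enough.

```python
import math
import random


class SloppyArm:
    """Hypothetical stand-in for a cheap arm: moves land near, not on, the command."""

    def __init__(self, x=0.0, y=0.0, error_fraction=0.2, seed=0):
        self.x, self.y = x, y
        self.error_fraction = error_fraction  # assumed worst-case error per move
        self._rng = random.Random(seed)

    def move_by(self, dx, dy):
        # Each axis over- or undershoots by up to error_fraction of the command.
        e = self.error_fraction
        self.x += dx * (1 + self._rng.uniform(-e, e))
        self.y += dy * (1 + self._rng.uniform(-e, e))


def measure_offset(arm, target):
    """Stand-in for the camera: vector from the gripper to the target."""
    return target[0] - arm.x, target[1] - arm.y


def align(arm, target, tolerance=1.0, gain=0.8, max_steps=20):
    """Repeat measure-then-correct until within tolerance; return moves used."""
    for step in range(max_steps):
        dx, dy = measure_offset(arm, target)
        if math.hypot(dx, dy) <= tolerance:
            return step
        # Command only part of the measured error so an overshoot stays small.
        arm.move_by(gain * dx, gain * dy)
    raise RuntimeError("arm did not reach the target within max_steps")


arm = SloppyArm(seed=1)
moves = align(arm, target=(100.0, 50.0))
print(f"aligned after {moves} corrective moves at ({arm.x:.2f}, {arm.y:.2f})")
```

With these made-up numbers, every move leaves at most 36% of the previous error behind, so even a sloppy arm settles on the target in a handful of steps. A real system would replace `measure_offset` with an actual camera measurement and `move_by` with servo commands.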

Senior design classes are a great way to teach students how to integrate all of their knowledge into a final project, and the professors often include constraints the students might face in the real world (like the budget limit in this project). The requirement to thoroughly document the build process is also a lesson that more people could stand to learn. Senior design classes have attempted to solve a lot of life’s other problems, too; from autonomous vehicles to bartenders, there’s been a solution for almost every problem.