To read the IT press in the early 1990s, those far-off days just before the Web was the go-to source of information, was to be fed a rosy vision of a future in which desktop and server computing would be a unified and powerful experience. IBM and Apple would unite behind a new OS called Taligent that would run Apple, OS/2, and 16-bit Windows code, and coupled with UNIX-based servers, this would revolutionise computing.

We know that this never quite happened as prophesied, but along the way it did deliver a few forgotten but interesting technologies. [Old VCR] has a look at one of these: a feature of IBM's AIX, which shipped with mid-90s Apple servers as a result of this partnership, in which Mac client applications could have server-side components, allowing them to offload computation to the more powerful machine.

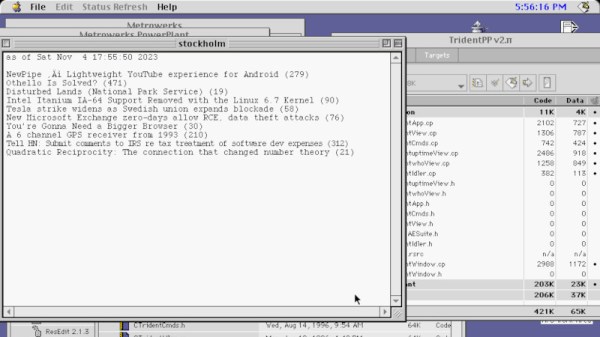

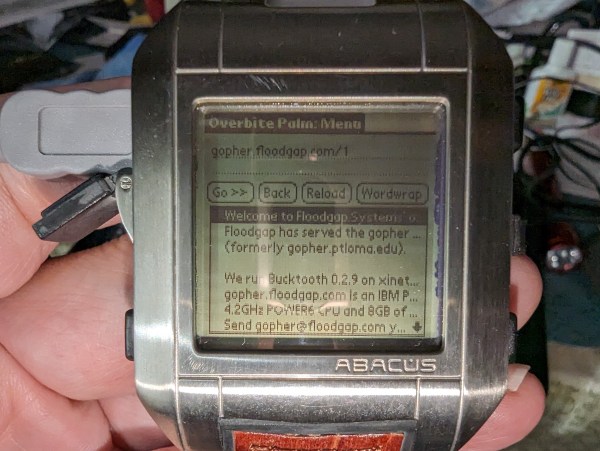

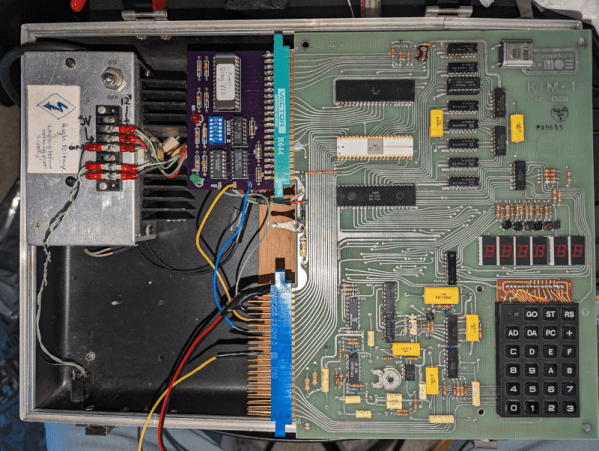

The full article is very long but full of interesting nuggets of forgotten 1990s computing history. [Old VCR] finishes off by creating a simple sample application using the server-side computing feature: a native Mac OS application that calls a server component to grab the latest Hacker News stories. It's a reminder that DOS/Windows and Novell Netware weren't the only games in town. The Taligent/AIX combo never happened, but its legacy found its way into the subsequent products of both companies. By the middle of the decade, even Microsoft had famously been caught out by the rapid rise of the Web. Unexpectedly, this wasn't the only 1990s venture from Apple involving another company's operating system. Sometimes, you just want to run Doom.