Watching a child learn to run is a joyous but sometimes painful experience. It seems the same is true for [James Bruton]’s impressive Sonic the Self-Balancing robot, even with bendable knees and force-sensitive legs.

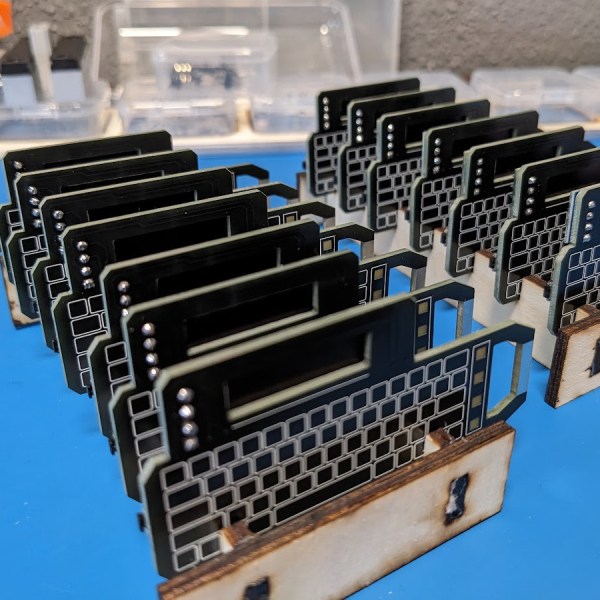

We covered the mechanical side of the project recently, and now [James] has added the electronics to turn it into a truly impressive working robot (videos after the break). Getting it to this point was not without challenges, but fortunately he is sharing the experience with us, wipe-outs and all. The knees of this robot are actuated by a pair of motors driving ball screws, which are not back-drivable: pushing on a leg will not turn its motor. External sensors are therefore needed so the motors can actively respond to inputs. In this case those are load cells in the legs and an MPU6050 IMU for balancing. The main control board is a Teensy 3.6, with an NRF24 module providing remote control.
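
To make the point about non-back-drivable knees concrete, here is a minimal sketch of one common way to let a stiff actuator "give" under load: measure the force and convert it into a commanded position change. This is not [James]'s firmware. The function name, gains, units, and travel limits are all hypothetical, and it is written in Python for readability rather than as Teensy code.

```python
def admittance_step(extension_mm, force_n, target_force_n,
                    gain_mm_per_n_s, dt_s,
                    min_mm=0.0, max_mm=100.0):
    """Return the next leg extension command for one control tick.

    All names, units, and limits are illustrative assumptions.
    A ball screw will not move when the leg is pushed, so the
    controller has to move it deliberately in response to the
    force reported by the load cell.
    """
    # Positive error means the leg carries more load than desired.
    force_error = force_n - target_force_n
    # Retract the leg when overloaded, extend it when underloaded.
    velocity_mm_s = -gain_mm_per_n_s * force_error
    next_extension = extension_mm + velocity_mm_s * dt_s
    # Keep the command inside the (assumed) mechanical travel.
    return max(min_mm, min(max_mm, next_extension))


# One tick at 100 Hz with the leg carrying 20 N more than the target.
next_cmd = admittance_step(50.0, 120.0, 100.0, 0.5, 0.01)
```

Calling this once per control tick for each leg makes the knee behave like a soft spring-damper even though the mechanism itself is rigid, which is the role the load cells play in the description above.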

[James] wanted the robot to be able to lean into turns and handle uneven surfaces (small ramps) without tipping or falling over. The leaning part was fairly simple (for him), but the sensor integration for uneven surfaces turned out to be a real challenge, and required multiple iterations to get working. The first approach was to move the robot in the direction of the tipping motion to absorb it, and then return to level. However, this could cause it to tip over on slightly larger ramps. When he instead tried to keep the robot level while going over a ramp with one leg, it went into wild side-to-side oscillations as it dropped back to level ground. This was corrected by using the load cells to damp the motion.
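
The oscillation problem is a classic symptom of a leveling loop with too little damping. The toy simulation below illustrates why adding a damping term calms it down. It is only a sketch under stated assumptions: the robot's roll is modeled as a simple second-order system, the gains are made up, and the damping term is taken from the simulated roll rate as a stand-in for whatever signal the load cells provide on the real robot (the write-up does not say how they enter the loop).

```python
def simulate_roll(kp, kd, roll0_deg=5.0, dt=0.01, duration=5.0):
    """Return the roll angle history of a toy leveling loop.

    kp pulls the body back toward level; kd opposes the motion.
    The model and all numbers are illustrative assumptions, not
    measurements from the robot.
    """
    roll, rate = roll0_deg, 0.0
    history = []
    for _ in range(int(duration / dt)):
        # Corrective action: proportional to tilt, minus damping.
        accel = -kp * roll - kd * rate
        # Semi-implicit Euler keeps the undamped case from blowing up.
        rate += accel * dt
        roll += rate * dt
        history.append(roll)
    return history


def late_amplitude(history, samples=100):
    """Largest absolute roll over the last `samples` steps."""
    return max(abs(r) for r in history[-samples:])


# Same disturbance, with and without the damping term.
undamped = late_amplitude(simulate_roll(kp=20.0, kd=0.0))
damped = late_amplitude(simulate_roll(kp=20.0, kd=4.0))
```

With `kd` set to zero the body keeps rocking at roughly its initial amplitude, while the damped run settles close to level within a few seconds. That is the qualitative behavior change described above.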