Tracking the improved specifications of various components over the years is one interesting way to watch technology advance. But clock speeds, memory sizes, and power consumption figures are fairly intangible compared to seeing modern hardware actually reproduce the technology of days of yore. For example, this $40 microcontroller can do what a video game console could do in the 80s, for about a tenth of the (inflation-adjusted) price.

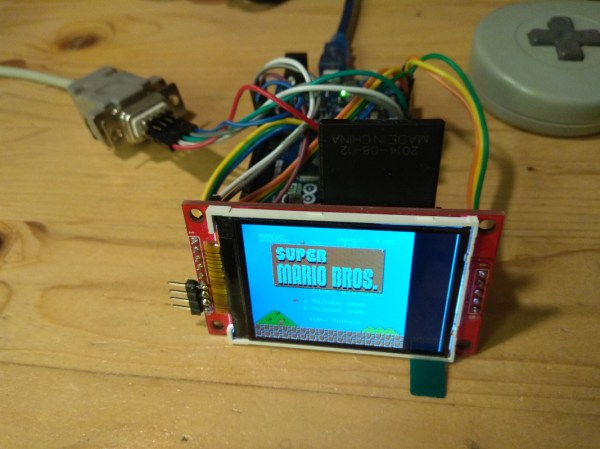

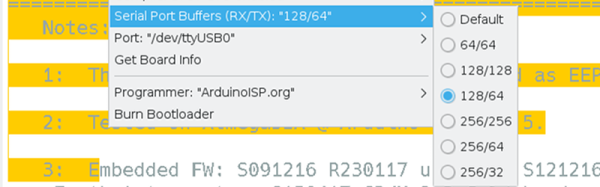

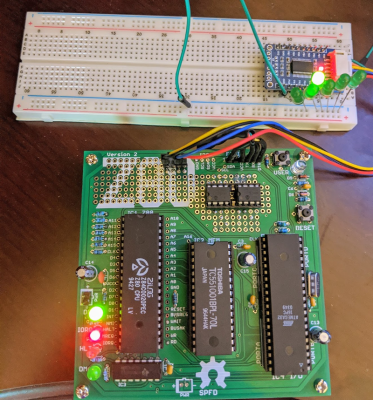

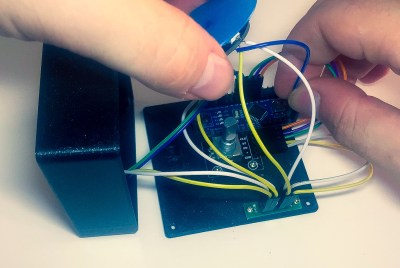

NESDUE is an NES emulator that runs entirely on an Arduino Due. The Due has some limitations that have to be worked around to run Nintendo games, though. For one, it needs to be overclocked to be playable, and it also needs a workaround for its 96 kB RAM limit. From there, a small screen and a controller (from a Super Nintendo) are wired up, and the gaming can begin.

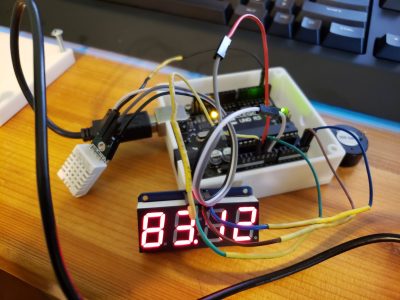

This is an impressive feat for the Arduino platform, especially given how much memory tweaking it takes. NESDUE might be the most advanced gaming system that runs entirely on an Arduino, right up there with the Arduinocade, which also provides an arcade-like experience straight from an Arduino.