It might be difficult for modern audiences to believe, but at one point Microsoft Windows fit on floppy disks. This was a simpler time, with smaller hard drives, lower resolution displays, and no hacker blogs for you to leave pessimistic comments on. A nearly unrecognizable era, to be sure. But if you’re one of the people who looks back on these days fondly, you might wonder why we don’t see this tiny graphical operating system smashed into modern hardware. After all, SkiFree sure ain’t gonna play itself.

Well, wonder no more. A hacker by the name of [redsPL] thought that Microsoft’s latest and greatest circa 1992 might do well crammed into the free space remaining on a ThinkPad X200’s firmware EEPROM. It would take a little fiddling, plus the small matter of convincing the BIOS to see the EEPROM as a virtual floppy drive, but clearly those are all minor inconveniences for anyone mad enough to boot their hardware into a nearly 30-year-old copy of Visual Basic for a laugh.

The adventure started when [redsPL] helped a friend install libreboot and coreboot on a stack of old ThinkPads by using a Raspberry Pi as an SPI flasher, a pastime we’re no strangers to ourselves. Once the somewhat finicky software and hardware environment was up and running, it seemed a waste not to put it to further use, especially given that most firmware replacements fill only a fraction of the X200’s 8 MB chip.

Of course, Windows 3.1 was not designed for modern hardware and no proper drivers exist for much of it. Just getting the display resolution up to 1024×768 (and still with only 256 colors) required patching the original video drivers with ones designed for VMware. [redsPL] wasn’t able to get the sound hardware working, but at least the PC speaker makes the occasional buzz. The last piece of the puzzle was messing around with the zip and xz commands until the disk image was small enough to sneak onto the chip.
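For a rough idea of what that last step involves, here is a minimal sketch that checks whether an xz-compressed image would fit in whatever space the firmware leaves free. It is not [redsPL]'s actual procedure: the helper names, the 2 MB firmware figure, and the blank floppy used as a stand-in image are all assumptions made for illustration. Only the 8 MB chip size comes from the story above.

```python
import lzma

# The X200's flash chip is 8 MB, per the article.
CHIP_SIZE = 8 * 1024 * 1024


def compressed_size(image: bytes) -> int:
    """Return the size of the image after xz (LZMA) compression at the highest preset."""
    return len(lzma.compress(image, preset=9 | lzma.PRESET_EXTREME))


def fits_on_chip(image: bytes, firmware_bytes: int, chip_size: int = CHIP_SIZE) -> bool:
    """True if the compressed image fits in the space the firmware leaves free."""
    free = chip_size - firmware_bytes
    return compressed_size(image) <= free


if __name__ == "__main__":
    # Hypothetical stand-in for a real disk image: a blank 1.44 MB floppy.
    blank_floppy = bytes(1474560)
    # Hypothetical firmware footprint of 2 MB; the real figure depends on the build.
    print(fits_on_chip(blank_floppy, firmware_bytes=2 * 1024 * 1024))
```

A real Windows install compresses far less readily than a blank disk, which is presumably why the process took some trial and error.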

Believe it or not, this isn’t the first time we’ve seen Windows from this era running on a (relatively) modern ThinkPad. For whatever reason, these two legends of the computing world seem destined to keep running into each other.

[Thanks to Renard for the tip.]

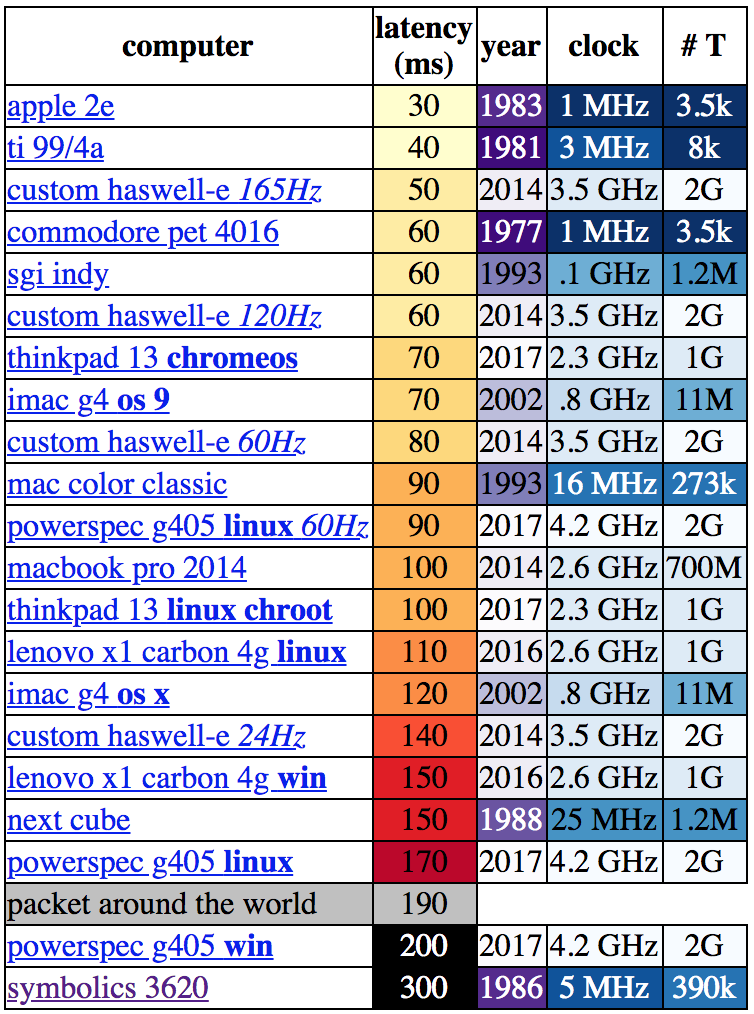

