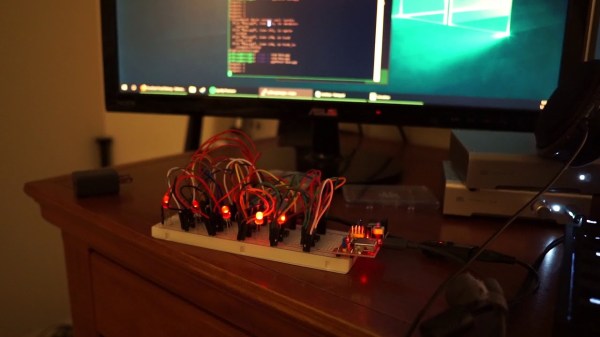

Do you ever look at the news and wonder about the process behind the news cycle? I did, and for the last couple of decades it’s been the subject of one of my projects. The Raspberry Pi on my shelf runs my word trend analysis tool for news content, and since my journey from curious geek to having my own large corpus analysis system has taken twenty years, it’s worth a second look.

How Career Turmoil Led To A Two-Decade Project

In the middle of the 2000s I had come out of the dotcom crash mostly intact, and was working for a small web shop. When they went bust I was casting around as one does, and spent a while as a Google quality rater while I looked for a new permie job. These teams are employed by the search giant through temporary employment agencies, and in loose terms their job is to be the trained monkeys against whom the algorithm is tested. The algorithm chose X, and if the humans also chose X, the algorithm is probably getting it right. Being a quality rater is not in any way a high-profile job, but with the big shiny G on my CV I soon found myself in demand from web companies seeking some white-hat search engine marketing expertise. What I learned mirrored my lesson from a decade earlier in the CD-ROM business, that on the web as in any other electronic publishing medium, good content well presented has priority over any black-hat tricks.

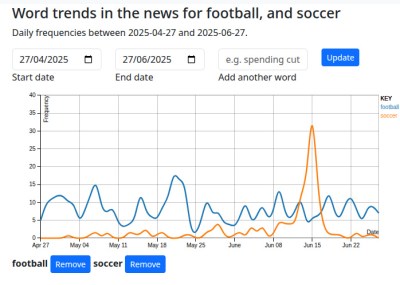

But what makes good content? Forget an obsession with stuffing bogus keywords in the text, and instead talk about the right things, and do it authoritatively. What are the right things in this context? If you are covering a subject, you need to do so using the right language: that which the majority uses, rather than language only you use. I can think of a bunch of examples which I probably shouldn’t talk about, but an example close to home for me comes in cider. In the UK, cider is a fermented alcoholic drink made from apples, and as a craft cidermaker of many years’ standing I have a good grasp of its vocabulary. The accepted spelling is “Cider”, but there’s an alternate spelling of “Cyder” used by some commercial producers of the drink. It doesn’t take long to realise that online, hardly anyone uses cyder with a Y, and thus pages concentrating on that word will do less well than those talking about cider.

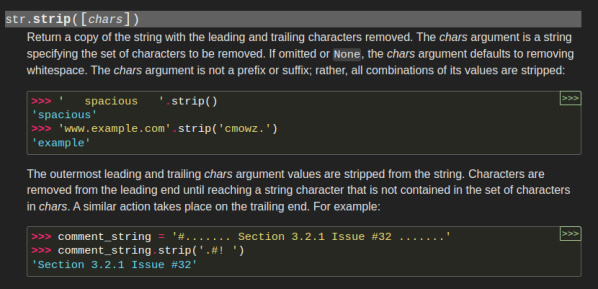

I started to build software to analyse language around a given topic, with the aim of discerning the metaphorical cider from the cyder. It was a great surprise a few years later to discover that I had invented for myself the already-existing field of computational linguistics, something that would have saved me a lot of time had I known about it when I began. I was taking a corpus of text and computing the frequencies and collocates (words that appear alongside each other) of the words within it, and from that I could quickly see which wording mattered around a subject, and which didn’t. This led seamlessly to an interest in what the same process would look like for news data with a time axis added, so I created a version which harvested its corpus from RSS feeds. Thus began my decades-long project.
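For the curious, the frequency and collocate counting described above can be sketched in a few lines of Python. This is only a minimal illustration and not the software I actually wrote: the three-sentence corpus, the `tokenize` and `collocates` helpers, and the two-word window are all assumptions made up for the example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def collocates(tokens, target, window=2):
    """Count the words found within `window` tokens either side of `target`."""
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok != target:
            continue
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                counts[tokens[j]] += 1
    return counts


# Hypothetical toy corpus, invented purely for illustration.
corpus = [
    "Craft cider is made from fermented apples.",
    "This farmhouse cider uses local apples.",
    "Some producers label their cider as cyder.",
]

# Tokenize each document separately so that a collocate window
# never runs across the boundary between two documents.
docs = [tokenize(doc) for doc in corpus]

# Word frequencies across the whole corpus.
freq = Counter(tok for doc in docs for tok in doc)

# Collocates of "cider", accumulated document by document.
cider_collocates = Counter()
for doc in docs:
    cider_collocates.update(collocates(doc, "cider"))

print(freq.most_common(5))
print(cider_collocates.most_common(5))
```

Even on a toy corpus like this the counts show "cider" outnumbering "cyder". A real system would presumably also need stop word handling and a much larger corpus before the numbers mean anything.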
