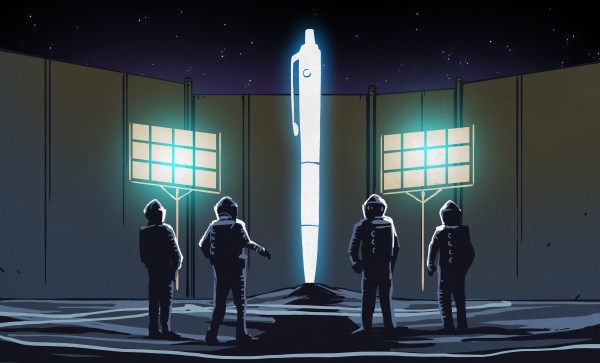

We’ve all heard of the Fisher Space Pen. Heck, there’s even an episode of Seinfeld that focuses on this fountain of ink, which is supposed to be ready for action no matter what you throw at it. The legend of the Fisher Space Pen says that it can and will write from any angle, in extreme temperatures, underwater, and most importantly, in zero gravity. While this technology is a definite prerequisite for astronauts in space, it has a long list of practical Earthbound applications as well (though it would be nice if it also wrote on any substrate).

You’ve probably heard the main myth of the Fisher Space Pen, which is that NASA spent millions to develop it, followed quickly by the accompanying joke that the Russian cosmonauts simply used pencils. The truth is, NASA had already tried pencils and decided that graphite particles were too much of an issue because they would potentially clog the instruments, like bags of ruffled potato chips and unsecured ant farms.

A Space-Worthy Instrument Indeed

Usually, it’s government agencies that advance technology, and then it trickles down to the consumer market at some point. But NASA didn’t develop the Space Pen. No government agency did. Paul Fisher of the Fisher Pen Company privately spent most of the 1960s working on a pressurized pen that didn’t require gravity in the hopes of getting NASA’s attention and business. The gambit worked: NASA took notice and motivated him to keep going until he had a pen that performed.

Then they tested the hell out of it in all possible positions, exposed it to extreme temperatures between -50 °F and 400 °F (-46 °C to 204 °C), and wrote legible laundry lists in atmospheres ranging from pure oxygen to a total vacuum. So, how does this marvel of engineering work?

The Fisher Space Pen’s ink cartridge is pressurized to 45 PSI with nitrogen, which keeps oxygen out in the same manner as potato chip bags. Inside is a particularly viscous, gel-like ink that turns to liquid when it meets up with friction from the precision-fit tungsten carbide ballpoint.

Between the viscosity and the precision fit of the ballpoint, the pen shouldn’t ever leak. But as you’ll see in the video below (spoiler alert!), snapping an original Space Pen cartridge results in a quick flood of thick ooze as the ink is forced out by the nitrogen.
