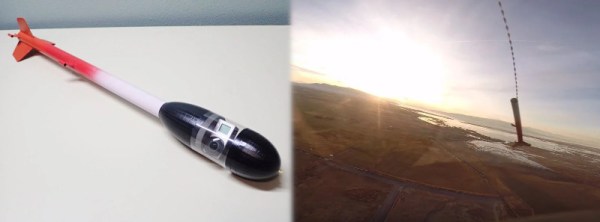

Launching model rockets is fun, but the real meat of the hobby lies in what you do next. Some choose to instrument their rockets or carry other advanced payloads. [seamster] likes to film his flights, and built a nosecone camera package to do so.

A GoPro is the camera of choice for [seamster]’s missions, since its action cam design makes it easy to start recording with a single press of a button. To mount it on the rocket, the nosecone was designed in several sections. The top and bottom pieces are 3D printed and joined by a clear plastic cylinder cut from a soda bottle. Inside the cylinder, the GoPro and altimeter hardware are held in place with foam blocks cut to shape from old floor mats. The rocket’s parachute is attached to the top of the nosecone, which lets the camera hang in the correct orientation during both the ascent and descent phases of the flight. Check out the high-flying videos created with this setup after the break.

It’s a simple design that [seamster] was able to whip up in Tinkercad in just a few hours, and one that’s easily replicated by the average maker at home. Getting your feet wet with filming your flights has never been easier; we’ve certainly come a long way from shooting on film in the 1970s.
