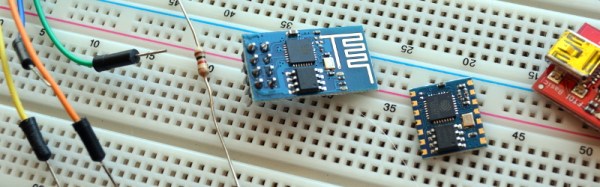

The ESP8266 is the reigning WiFi wonderchip, quickly securing its reputation as the go-to platform for an entire ecosystem of wireless devices. There’s nothing that beats the ESP8266 on a capability vs. price comparison, and this tiny chip is even finding its way into commercial products. It’s also a fantastic device for the hardware tinkerer, leading to thousands of homebrew projects built around this magical little part.

In every technical document, summary, and description of the ESP8266, the ESP8266 is said to be a 3.3V part. While we’re well into the age of 3.3V logic, an incredible number of boards and devices still operate with 5V logic. Over on the Hackaday.io stack, [Radomir] is questioning this basic assumption. He’s wondering if the ESP8266 is 5V tolerant after all. If it is, great. We don’t need level converters, and interfacing the ESP to 5V USB-to-TTL serial adapters becomes much easier. Yes, you’ll still need a regulator to power the chip if the rest of your project runs at 5V, but if the pins are 5V tolerant, interfacing the ESP8266 with a variety of hardware becomes very easy.

[Radomir]’s evidence for the possibility of 5V tolerant inputs comes from a slight difference between the official datasheet from Espressif and the datasheet the community translated before Espressif realized how many of these chips they were going to sell.

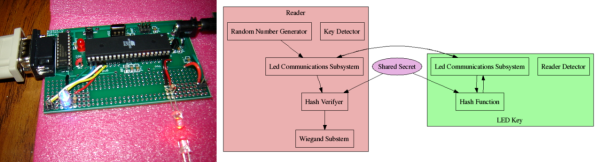

The best evidence of 5V tolerant pins might come from real-world experience: if you can drive a pin with 5V for months on end without it failing, there might be something to this claim. It’s not definitive, though; a device that survives a few months of 5V inputs may still fail later. So far a few people have spoken up and presented ESPs directly connected to the 5V pin of an Arduino that still work after months of service. Whether this is evidence of a 5V tolerant design or simply luck is another matter entirely.
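To put a rough number on why a few months of survival isn’t conclusive, here’s a back-of-the-envelope sketch in Python. It assumes a constant (exponential) failure rate, which is a simplification: if 5V inputs cause gradual damage, failures would cluster later in life rather than arrive at a steady rate, and early survival would say even less. With zero failures observed over T device-hours, the one-sided upper confidence bound on the failure rate is λ = −ln(1 − c) / T, roughly 3/T at 95% confidence. The function name and the board count and run time in the example are hypothetical, not data from the Hackaday.io thread.

```python
import math


def failure_rate_upper_bound(device_hours: float, confidence: float = 0.95) -> float:
    """Upper confidence bound on a constant failure rate (failures per hour),
    given zero failures observed over `device_hours` of total exposure.

    Assumes an exponential failure model, so P(no failures) = exp(-rate * T).
    Setting that probability equal to (1 - confidence) and solving for the
    rate gives the bound returned here.
    """
    if device_hours <= 0:
        raise ValueError("device_hours must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    return -math.log(1.0 - confidence) / device_hours


if __name__ == "__main__":
    # Hypothetical scenario: 3 ESP8266 boards, each run for ~6 months
    # (~4,380 hours) with 5V on a GPIO pin and no failures observed.
    total_hours = 3 * 4380
    rate = failure_rate_upper_bound(total_hours)
    print(f"95% upper bound on failure rate: {rate:.2e} failures/hour")
    print(f"Shortest mean time to failure still consistent: {1 / rate:,.0f} hours")
```

In that hypothetical case (13,140 device-hours), the bound comes out to roughly 2.3 × 10⁻⁴ failures per hour, so a mean time to failure of only about 4,400 hours, around six months per board, is still statistically consistent with seeing no failures at all. A handful of surviving boards simply can’t distinguish “5V tolerant” from “slowly dying.”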

While the official datasheet from Espressif lists a maximum VIH of 3.3V, maximum specs are rarely true maximums: you can often push a part harder without things flying apart at the seams. Unfortunately, unless we hear from the engineers at Espressif, we won’t know whether the ESP8266 was designed to be 5V tolerant, whether it can handle 5V signals reliably, or whether 5V signals are a really good way to eventually kill a chip.

Lucky for us — and this brings us to the entire point of an Ask Hackaday column — a few Espressif engineers read Hackaday. They’re welcome to pseudonymously chime in below along with the rest of the peanut gallery. Failing that, the ESP8266 has been decapped; are there any die inspection wizards who can back up a claim of 5V tolerance for the GPIO? We’d also be interested in hearing any ideas for stress testing pin tolerance.