There is a certain magic and uniqueness to hardware, particularly when it comes to audio. Tube amplifiers are well-known and well-loved by audio enthusiasts and musicians alike. However, that uniqueness comes at a price: gear takes up space and cannot be configured beyond what it was designed to do. [keyth72] has taken it upon themselves to recreate the smooth sound of the Fender Blues Jr. small tube guitar amp. But rather than using hardware or standard audio software, they threw the magic of AI at it.

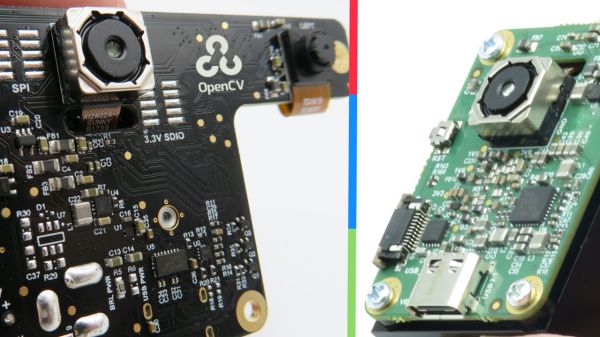

In some ways, recreating a transformation is exactly what AI is designed for. There’s a clear, recordable input and a corresponding output to learn from. In this case, [keyth72] recorded several guitar sessions with the guitar audio sent through the amp they wanted to recreate. Using WaveNet, they created a model that applies the transform to input audio in real time. The Gain and EQ knobs are handled outside the model itself to keep things simple. Instructions on how to train your own model are included on the GitHub page.
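For a rough feel of what a WaveNet-style model does to the signal, here is a minimal sketch of its core building block: a stack of causal, dilated convolutions with gated activations. This is not [keyth72]'s code. The function names, layer sizes, and random untrained weights are all assumptions made for illustration, and a real WaveNet also has skip connections and 1x1 convolutions that are left out here. The `gain` argument is applied before the network, mirroring the idea of keeping the knobs outside the model.

```python
import math
import random


def causal_dilated_conv(x, weights, dilation):
    """y[t] = sum_k weights[k] * x[t - k*dilation]; samples before t=0 count as zero."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def gated_layer(x, filter_w, gate_w, dilation):
    """One simplified WaveNet-style layer: tanh(filter) * sigmoid(gate), plus a residual."""
    f = causal_dilated_conv(x, filter_w, dilation)
    g = causal_dilated_conv(x, gate_w, dilation)
    z = [math.tanh(a) * (1.0 / (1.0 + math.exp(-b))) for a, b in zip(f, g)]
    return [xi + zi for xi, zi in zip(x, z)]


def receptive_field(kernel_size, dilations):
    """Number of past-and-present input samples that can influence one output sample."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def make_model(dilations, kernel_size=2, seed=0):
    """Hypothetical untrained model: random weights stand in for learned ones."""
    rng = random.Random(seed)
    layers = []
    for d in dilations:
        filter_w = [rng.uniform(-0.5, 0.5) for _ in range(kernel_size)]
        gate_w = [rng.uniform(-0.5, 0.5) for _ in range(kernel_size)]
        layers.append((d, filter_w, gate_w))
    return layers


def run_model(x, layers, gain=1.0):
    """Apply the gain outside the network, then run the layers in order."""
    h = [gain * s for s in x]
    for dilation, filter_w, gate_w in layers:
        h = gated_layer(h, filter_w, gate_w, dilation)
    return h


if __name__ == "__main__":
    dilations = [1, 2, 4, 8]
    model = make_model(dilations)
    clean = [math.sin(0.1 * n) for n in range(64)]  # stand-in for a guitar signal
    amped = run_model(clean, model, gain=2.0)
    print("receptive field:", receptive_field(2, dilations), "samples")
    print("first output samples:", amped[:4])
```

Because every layer only looks backward in time, each output sample depends on the current and earlier input samples only, which is what makes sample-by-sample real-time processing possible. Doubling the dilation at each layer lets the receptive field grow quickly without adding many weights.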

While the model is simply approximating the real hardware, it still sounds quite impressive. Perhaps the next time you need a particular sound from your home-built amp or guitar pedal, you might reach for your computer instead.
