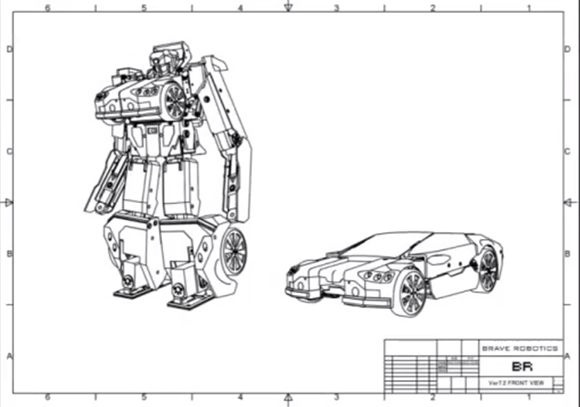

Nearly 30 years after the first episode of Transformers aired, someone has finally done it. Brave Robotics, a company based in Japan, has created a true transforming robot that is half remote-control car and half remote-control bipedal robot.

According to Brave Robotics’ site, this creation is the result of more than 10 years of work. In 2002, the company completed the first version of the Transform Robot. It was a relatively simple affair that could transform but could not walk or drive. Since then, the prototypes have been improved incrementally with a drive system for the wheels, a steering mechanism, and even the ability to move their arms and shoot plastic darts.

Surprisingly, you can actually buy one of Brave Robotics’ transforming robots for ¥1,980,000 JPY, or about $24,000 USD. It's a little pricey, but we’re sure we’ll see more transforming robots in the future.
