It seems as though more and more of the simple command-line tools and small scripts that used to be Bash or small C programs are slowly turning into Python programs. We will have to wait and see whether that ultimately turns out to be a good idea. In the meantime, the next time you're revamping a tool or writing a new one, why not spice it up with Rich?

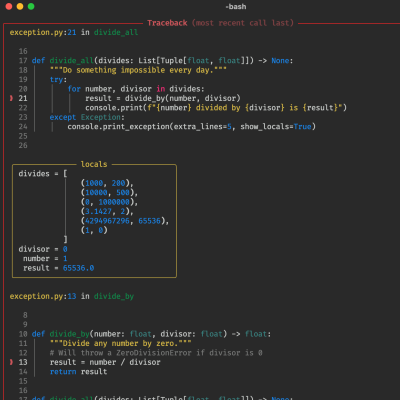

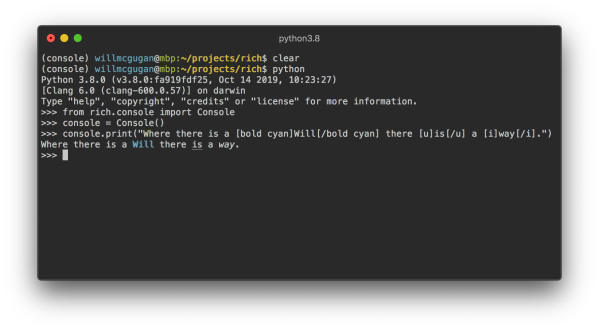

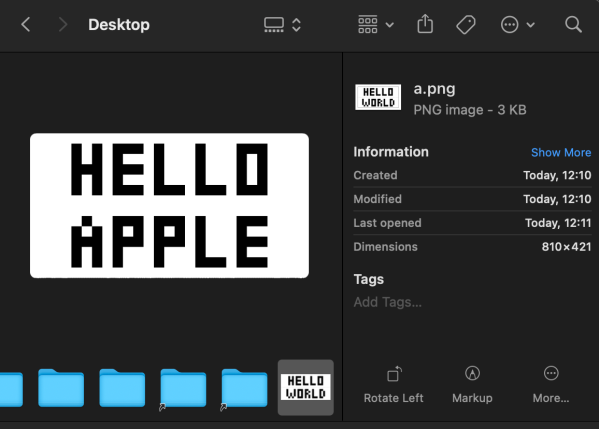

Rich is a Python library written by [Will McGugan] that offers text formatting, colors, tables, progress bars, markdown, syntax highlighting, and more through the power of ANSI codes. The best part is that it works on macOS, Windows, and Linux. In addition, it offers logging solutions that work out of the box. One of the best features of Rich is the inspect functionality. You can pass in an object, and it will use reflection to print a nicely formatted report detailing exactly what the object is, which is helpful when debugging. Another standout feature is the traceback, which shows a formatted and annotated snapshot of the relevant code on the stack when an exception occurs.
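To give a feel for those three features together, here is a minimal sketch of styled output, `inspect`, and a formatted traceback. The `Job` class and the `render_demo` function are made-up names for illustration only, and the console is pointed at an in-memory buffer (with the terminal forced on) simply so the result can be captured; in a real tool you would most likely just create `Console()` and print straight to the terminal.

```python
import io
from dataclasses import dataclass

from rich import inspect
from rich.console import Console


@dataclass
class Job:
    """Hypothetical object, only here so inspect() has something to describe."""

    name: str
    retries: int = 3


def render_demo() -> str:
    """Render a few Rich features into a string and return it."""
    buffer = io.StringIO()
    # force_terminal makes Rich emit ANSI codes even though the target is not a TTY.
    console = Console(file=buffer, force_terminal=True,
                      color_system="standard", width=80)

    # Console markup: square-bracket tags become ANSI styling.
    console.print("[bold green]OK[/bold green] build finished")

    # inspect() reports on an object's type, docstring, and attributes.
    inspect(Job("nightly"), console=console)

    # print_exception() renders the exception currently being handled.
    try:
        1 / 0
    except ZeroDivisionError:
        console.print_exception(show_locals=True)

    return buffer.getvalue()


if __name__ == "__main__":
    print(render_demo())
```

For tracebacks across a whole program, Rich also provides `rich.traceback.install()`, which replaces the default exception hook so uncaught exceptions get the same treatment.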

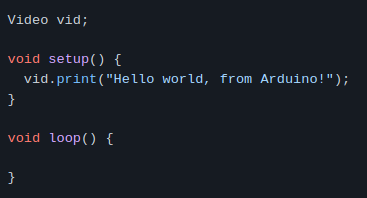

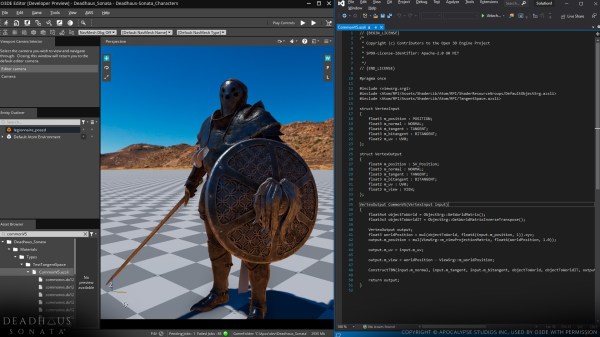

The source itself is well-written Python with comments and typing information. There's a good chance you'll pick up a trick or two reading through it. Rich is used to build Textual (also by [Will]), which aims to be a GUI API that runs in the terminal. It serves as an excellent example of what Rich is capable of. It is incredible how long these terminal protocols have been around. [Will] even ran Rich on a Teletype Model 33. If you're working in a more constrained environment, why not bring some color to your Arduino serial terminal?

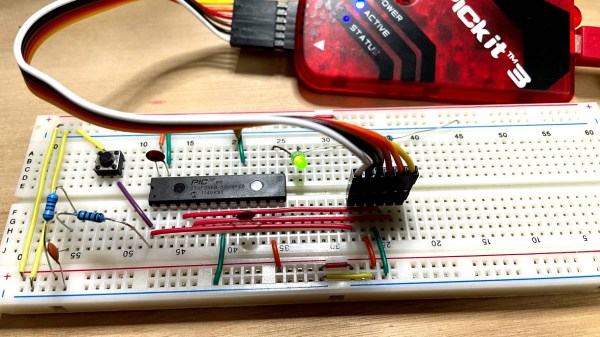

[Harry Gill] has you covered with
