[Gabriel] picked up a GoPro to document his adventures on the slopes and trails of Montreal, but quickly found he was better in front of the camera than behind it. Turns out he’s even better seated behind his workbench, as the completely custom auto-tracking gimbal he came up with is nothing short of a work of art.

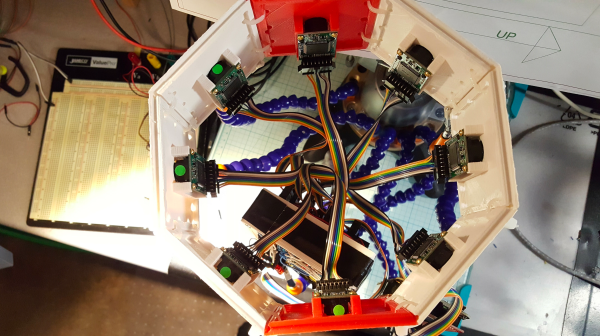

There’s quite a bit going on here, and as you might expect, it took several iterations before [Gabriel] got all the parts working together. The rather GLaDOS-looking body of the gimbal is entirely 3D printed, and holds the motors, camera, and a collection of ultrasonic receivers. The Nvidia Jetson TX1 that does the computational heavy lifting is riding shotgun in its own swanky-looking 3D printed enclosure, but [Gabriel] notes a future revision of the hardware should be able to bring the two together in a single housing.

In the current version of the system, the target wears an ultrasonic emitter that is picked up by the receivers in the gimbal. The rough position information provided by the ultrasonics is then refined by the neural network running on the Jetson TX1 so that the camera stays trained on the moving target. Right now the Jetson TX1 gets the video feed from the camera over WiFi, and commands the gimbal hardware over Bluetooth. Once the Jetson is inside the gimbal, however, some of the hardware can likely be directly connected, and [Gabriel] says the ultrasonics may be dropped from the design completely in favor of tracking purely in software. He plans on open sourcing the project, but says he’s got some internal housekeeping to do before he takes the wraps off it.
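[Gabriel] hasn’t released his code yet, so what follows is only a rough sketch of the coarse-then-refine idea, not his implementation. It assumes two ultrasonic receivers a known distance apart, a far-field time-difference-of-arrival bearing estimate, and a simple pinhole camera model. Every function name and parameter here is hypothetical, and the neural network is stood in for by a single detected pixel column.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate, dry air at about 20 °C


def coarse_bearing_deg(dt_s, receiver_spacing_m):
    """Rough bearing from the arrival-time difference between two receivers.

    Assumes the emitter is far away compared to the receiver spacing.
    """
    ratio = SPEED_OF_SOUND_M_S * dt_s / receiver_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp so timing noise can't break asin
    return math.degrees(math.asin(ratio))


def pixel_offset_deg(target_x_px, image_width_px, horizontal_fov_deg):
    """Angle between the optical axis and a detected target (pinhole model)."""
    half_width = image_width_px / 2.0
    focal_px = half_width / math.tan(math.radians(horizontal_fov_deg / 2.0))
    return math.degrees(math.atan((target_x_px - half_width) / focal_px))


def pan_correction_deg(coarse_error_deg, detection_x_px,
                       image_width_px, horizontal_fov_deg):
    """Use the vision estimate when there is a detection, else the ultrasonics."""
    if detection_x_px is None:
        return coarse_error_deg
    return pixel_offset_deg(detection_x_px, image_width_px, horizontal_fov_deg)
```

In a loop like this, the ultrasonic bearing would swing the camera close enough for the target to land in frame, after which the detector’s pixel offset takes over for the fine correction. Tilt would be handled the same way with a second receiver pair and the vertical field of view.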

From bare bones to cushy luxury, scratch-built camera gimbals have become something of a rite of passage for the photography hacker. But with this project, it looks like the bar got set just a bit higher.