By now we’ve all seen articles where the entire copy has been written by ChatGPT. It’s essentially a trope of its own at this point, so we will start out by assuring you that this article is being written by a human. AI tools do seem poised to be extremely disruptive to certain industries, though, but this doesn’t necessarily have to be a bad thing as long as they continue to be viewed as tools, rather than direct replacements. ChatGPT can be used to assist in plenty of tasks, and can help augment processes like programming (rather than becoming the programmer itself), and this article shows a few examples of what it might be used for.

While it can write some programs on its own, in some cases quite capably, for specialized or complex tasks it might not be quite up to the challenge yet. It will often appear extremely confident in its solutions even if it’s providing poor or false information, though, but that doesn’t mean it can’t or shouldn’t be used at all.

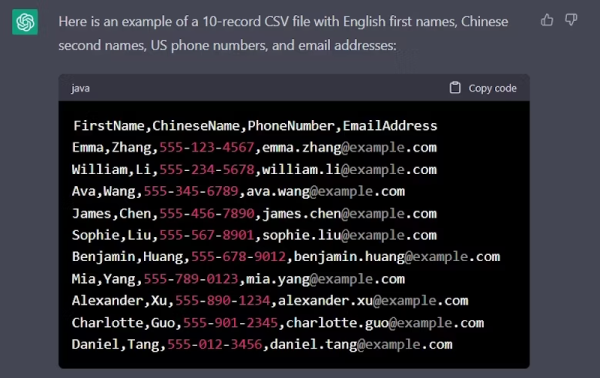

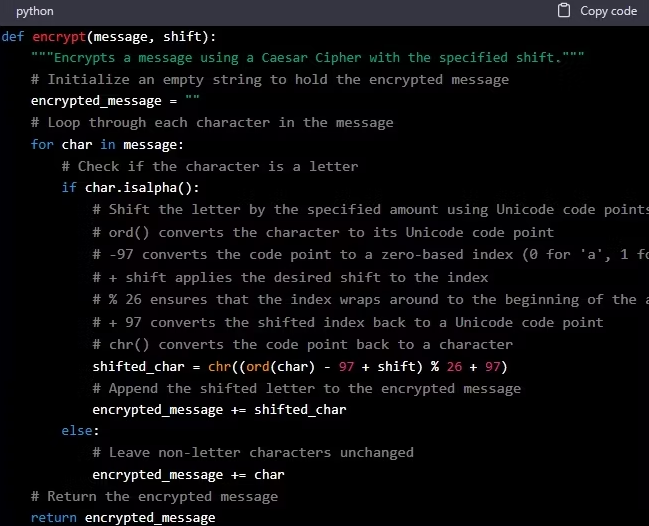

The article goes over a few of the ways it can function more as an assistant than a programmer, including generating filler content for something like an SQL database, converting data from one format to another, converting programs from one language to another, and even help with a program’s debugging process.

Some other things that ChatGPT can be used for that we’ve been able to come up with include asking for recommendations for libraries we didn’t know existed, as well as asking for music recommendations to play in the background while working. Tools like these are extremely impressive, and while they likely aren’t taking over anyone’s job right now, that might not always be the case.