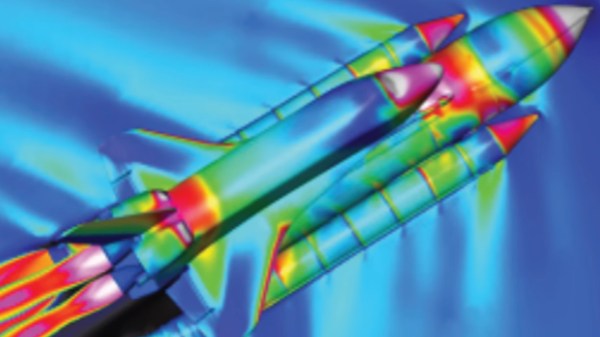

The History Guy on YouTube has posted an interesting video on the history of the supercomputer, with a specific focus on their use by NASA for the implementation of computational fluid dynamics (CFD) models of aeronautical assemblies.

The aero designers of the day were quickly finding out the limitations of the wind tunnel testing approach, especially for so-called transonic flow conditions. This occurs when an object moving through a fluid (like air can be modeled) produces regions of supersonic flow mixed in with subsonic flow and makes for additional drag scenarios. This severely impacts aircraft performance. Not accounting for these effects is not an option, hence the great industry interest in CFD modeling. But the equations for which (usually based around the Navier-Stokes system) are non-linear, and extremely computationally intensive.

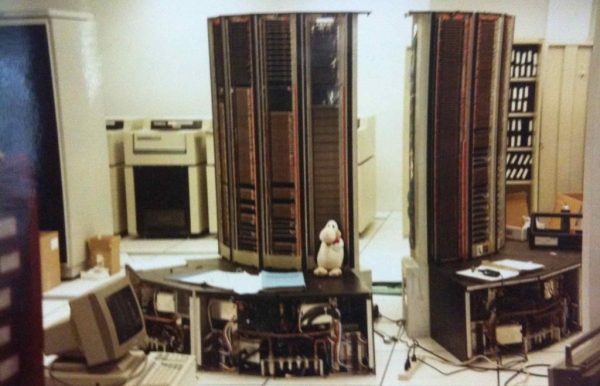

Obviously, a certain Mr. Cray is a prominent player in this story, who, as the story goes, exhausted the financial tolerance of his employer, CDC, and subsequently formed Cray Research Inc, and the rest is (an interesting) history. Many Cray machines were instrumental in the development of the space program, and now adorn computing museums the world over. You simply haven’t lived until you’ve sipped your weak lemon drink whilst sitting on the ‘bench’ around an early Cray machine.

Obviously, a certain Mr. Cray is a prominent player in this story, who, as the story goes, exhausted the financial tolerance of his employer, CDC, and subsequently formed Cray Research Inc, and the rest is (an interesting) history. Many Cray machines were instrumental in the development of the space program, and now adorn computing museums the world over. You simply haven’t lived until you’ve sipped your weak lemon drink whilst sitting on the ‘bench’ around an early Cray machine.

You see, supercomputers are a different beast from those machines mere mortals have access to, or at least the earlier ones were. The focus is on pure performance, ideally for floating-point computation, with cost far less of a concern, than getting to the next computational milestone. The Cray-1 for example, is a 64-bit machine capable of 80 MIPS scalar performance (whilst eating over 100 kW of juice), and some very limited parallel processing ability.

While this was immensely faster than anything else available at the time, the modern approach to supercomputing is less about fancy processor design and more about the massive use of parallelism of existing chips with lots of local fast storage mixed in. Every hacker out there should experience these old machines if they can, because the tricks they used and the lengths the designers went to get squeeze out every ounce of processing grunt, can be a real eye-opener.

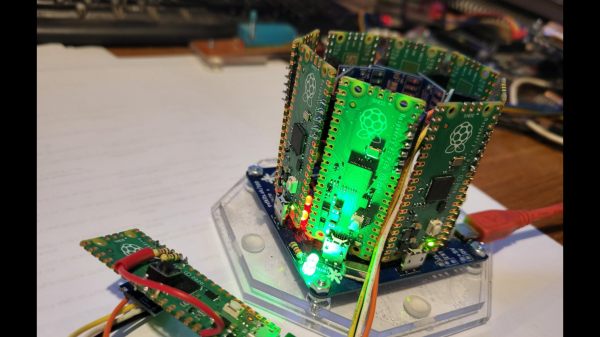

Want to see what happens when you really push out the boat and use the whole wafer for parallel computation? Checkout the Cerberus. If your needs are somewhat less, but dabbling in parallel computing gets you all pumped, you could build a small array out of Pine64s. Finally, the story wouldn’t be complete without talking about the life and sad early demise of Seymour Cray.

Continue reading “A History Of NASA Supercomputers, Among Others” →