It’s generally understood that most vehicles that humans interact with on a daily basis are operated through some kind of hand-controlled interface. However, this build from [Avisha Kumar] and [Leul Tesfaye] showcases a rather different take. A single motion input provides both steering and forward/reverse throttle control.

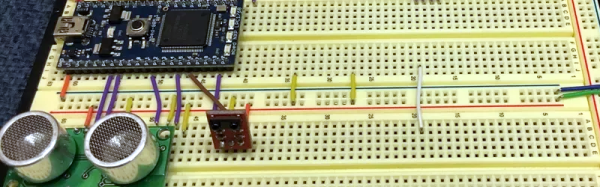

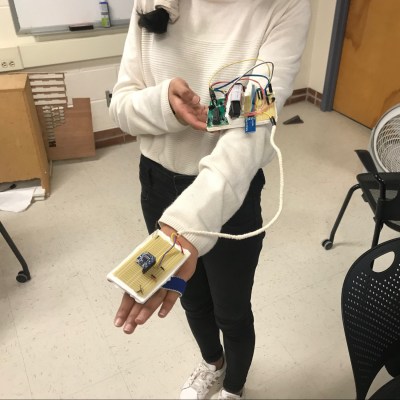

The project consists of a small car, driven by electric motors at the rear, with a servo-controlled caster at the front for steering. Control is provided by a PIC32 microcontroller receiving signals via Bluetooth. The car is commanded with a hand controller, quite literally: an accelerometer measures the pitch and roll of the user’s hand. Tilting the hand left and right steers the car, while rotating it fore and aft controls the throttle. Video after the break.
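For a feel of how a tilt-based control scheme like this might work, here is a minimal Python sketch that turns a raw accelerometer reading into throttle and steering commands. It is not the builders' code. The axis orientation, the sign conventions, the 45-degree full-scale tilt, the 5-degree dead zone, and every function name are assumptions made for illustration.

```python
import math

MAX_TILT_DEG = 45.0   # assumed: tilt angle that maps to full deflection
DEAD_ZONE_DEG = 5.0   # assumed: small tilts around level are ignored


def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees from a gravity vector measured in g.

    Assumes the hand is roughly still, so the accelerometer sees only gravity,
    and that x points forward, y sideways, and z up through the back of the hand.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def scale(angle_deg):
    """Map a tilt angle to the range [-1, 1] with a dead zone and clamping."""
    if abs(angle_deg) < DEAD_ZONE_DEG:
        return 0.0
    span = MAX_TILT_DEG - DEAD_ZONE_DEG
    value = (abs(angle_deg) - DEAD_ZONE_DEG) / span
    return math.copysign(min(value, 1.0), angle_deg)


def hand_to_command(ax, ay, az):
    """Convert one accelerometer sample into normalized drive commands."""
    pitch, roll = tilt_angles(ax, ay, az)
    return {"throttle": scale(pitch), "steering": scale(roll)}
```

With the hand held level, `hand_to_command(0.0, 0.0, 1.0)` gives zero throttle and zero steering. The dead zone keeps the car from creeping when the hand is only approximately flat, and the clamp stops an exaggerated tilt from commanding more than full deflection. On real hardware, the two normalized values would then be converted into motor PWM duty cycles and a servo pulse width.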

The project was built for a course at Cornell University, and thus is particularly well documented. It provides a nice example of reading sensor inputs and transmitting and receiving data. The actual microcontroller used is less important than the basic demonstration of “Hello World” robotics concepts. Keep this one in your back pocket for the next time you want to take a new chip for a spin!

We’ve seen similar work before, with a handmade controller using just potentiometers and weights.