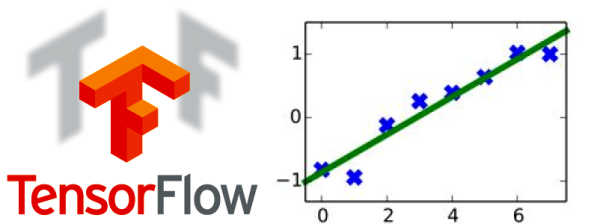

If you’ve looked at machine learning, you may have noticed that a lot of the examples are interesting but hard to follow. That’s why [Jostmey] created Naked Tensor, a bare-minimum example of using TensorFlow. The example is simple, just doing some straight line fits on some data points. One example shows how it is done in series, one in parallel, and another for an 8-million point dataset. All the code is in Python.

If you haven’t run into it yet, TensorFlow is an open source library from Google. To quote from its website:

TensorFlow is an open source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) communicated between them. The flexible architecture allows you to deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device with a single API. TensorFlow was originally developed by researchers and engineers working on the Google Brain Team within Google’s Machine Intelligence research organization for the purposes of conducting machine learning and deep neural networks research, but the system is general enough to be applicable in a wide variety of other domains as well.