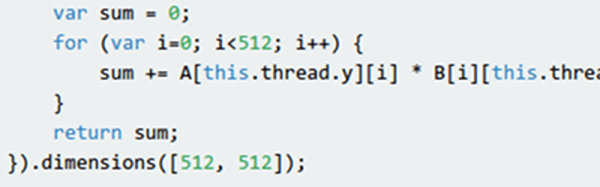

Everyone knows that writing programs that exploit the GPU (Graphics Processing Unit) in your computer’s video card requires special arcane tools, right? Well, thanks to [Matthew Saw], [Fazil Sapuan], and [Cheah Eugene], perhaps not. At a hackathon, they turned out a Javascript library that allows you to create “kernel” functions to execute on the GPU of the target system. There’s a demo available with a benchmark which on our machine sped up a 512×512 calculation by well over five times. You can download the library from the same page. There’s also a GitHub page.

The documentation is a bit sparse but readable. You simply define the function you want to execute and the dimensions of the problem. You can specify one, two, or three dimensions, as suits your problem space. When you execute the associated function it will try to run the kernels on your GPU in parallel. If it can’t, it will still get the right answer, just slowly.