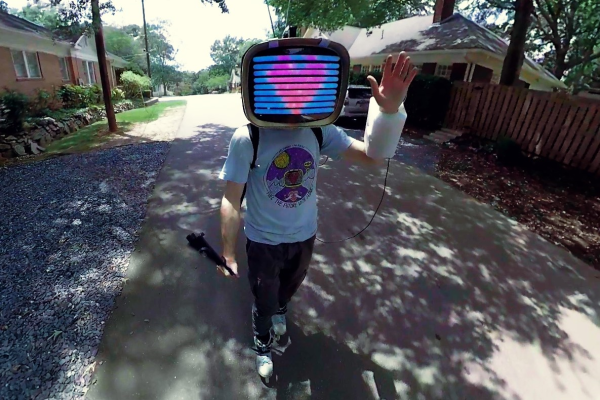

Gesture recognition and machine learning are getting a lot of air time these days, as people understand them better and develop ways to implement them on many different platforms. That opens the tools up to people who can put them to use outside strictly academic or business environments. Case in point: [nate.damen], who rollerblades down the streets of Atlanta wearing a gesture-recognizing, streaming TV over his head.

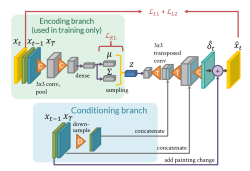

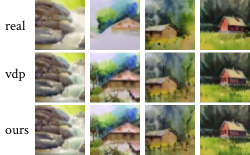

He’s known as [atltvhead], and the TV he wears has a functional LED screen on the front. The whole setup reminds us a little of Deep Thought. The screen can display various animations, which are controlled through Twitch chat as he streams his journeys around town. He wanted to add a little more interaction to the animations and simplify his user interface, though, so he set up a gesture-sensing sleeve that can augment the animations based on how he’s moving his arm. He uses an Arduino in the arm sensor and a Raspberry Pi in the backpack to tie it all together, and he goes deep into the weeds explaining how to use TensorFlow to recognize the gestures. The video linked below also shows a lot of his training runs for the machine learning system he used.
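For a rough feel of what "recognizing a gesture" from an arm sensor involves, here is a minimal sketch in plain Python. It is not [nate.damen]'s code and it does not use TensorFlow: a simple nearest-centroid classifier stands in for the trained model, and the data format, the gesture names (`still`, `wave`, `fist_pump`), and the synthetic readings are all assumptions made up for illustration.

```python
import math
from statistics import mean, pstdev

# Assumed format (hypothetical): a "window" is a list of (x, y, z)
# accelerometer samples in g, captured over a short stretch of time.

def window_features(window):
    """Summarize a window as per-axis mean and per-axis spread."""
    axes = list(zip(*window))  # three tuples: all x, all y, all z
    return [mean(a) for a in axes] + [pstdev(a) for a in axes]

def train_centroids(labeled_windows):
    """Average the feature vectors recorded for each gesture label."""
    grouped = {}
    for label, window in labeled_windows:
        grouped.setdefault(label, []).append(window_features(window))
    return {
        label: [mean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }

def classify(window, centroids):
    """Return the label whose centroid is closest to this window."""
    feats = window_features(window)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))

# Synthetic training data, invented for this example only.
still = [(0.0, 0.0, 1.0)] * 20
wave = [(math.sin(i * 0.8), 0.0, 1.0) for i in range(20)]
fist_pump = [(0.0, 0.0, 1.0 + math.sin(i * 0.8)) for i in range(20)]

centroids = train_centroids(
    [("still", still), ("wave", wave), ("fist_pump", fist_pump)]
)

# A slightly different side-to-side motion that was not in the training set.
new_reading = [(0.9 * math.sin(i * 0.8 + 0.3), 0.05, 1.0) for i in range(20)]
predicted = classify(new_reading, centroids)
print(predicted)
```

A neural network trained on many recorded runs would replace `train_centroids` and `classify` in a real build, but the overall shape is similar: chop the sensor stream into windows, turn each window into numbers, and pick the most likely gesture.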

[nate.damen] didn’t stop at the cheerful TV head either. He also wears a backpack that displays uplifting messages to people as he passes them by on his rollerblades, not wanting to leave out those who don’t get to see him coming. We think this is a great, feel-good project, and the amount of work that went into getting the gesture-recognition machine learning algorithm right is impressive on its own. If you’re new to TensorFlow, though, we have featured some projects that can do reliable object recognition using little more than a Raspberry Pi and a camera.
