There are plenty of “smart” toys on the market, some with more features than others. Nevertheless, most makers want complete control over a platform, something commercial offerings rarely provide. It was exactly this desire that motivated [MrDreamBot] to start hacking the Meccano Max.

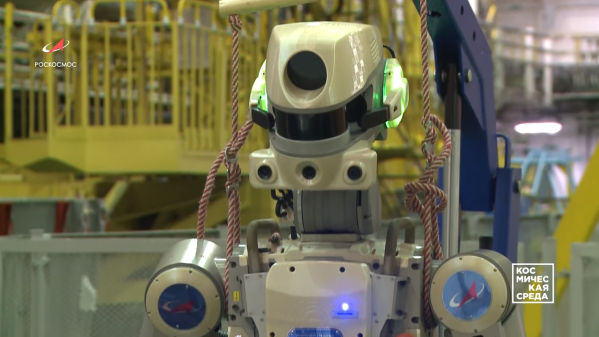

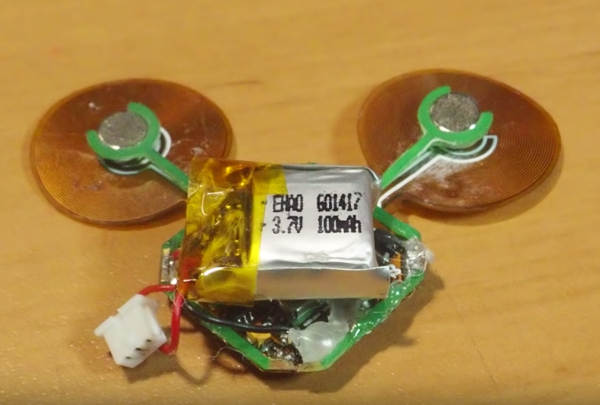

Meccano Max is a small companion robot, standing about 30 centimeters tall. Frustrated by the lack of an API, [MrDreamBot] started by writing an Arduino library to drive Max’s hardware. With that done, work began on integrating a Hicat Livera devboard into the robot. The board is an embedded Linux system with Arduino compatibility, and it can also stream video and connect over WiFi. So far, Max can be controlled through a browser while viewing a live video feed from the ‘bot, and the expressions displayed on Max’s face can be customized.

Oftentimes, it pays to replace stock hardware rather than try to work within the limitations of the original setup, and this project is no exception. That said, we’re still hoping someone out there will find a way to get Jibo back online. Look after your robot friends! Video after the break.