While the concept of automotive “autopilots” is still in its infancy, pretty much any aircraft larger than an ultralight will have some mechanism to at least hold a fixed course and altitude. Typically the autopilot system is built into the airplane’s controls, but this new system replaces the pilot themselves in a manner reminiscent of the movie Airplane.

The robot pilot, known as PIBOT, uses both AI and robotics technology to fly the airplane without altering the aircraft. Unlike a normal autopilot system, this one can be fed the aircraft’s manuals in natural language, understand them, and use that information to fly the airplane. That includes operating any of the aircraft’s cockpit controls, not just the control column and pedal assembly. Supposedly, the autopilot can handle everything from takeoff to landing, and operate capably during heavy turbulence.

The Korea Advanced Institute of Science and Technology (KAIST) research team that built the machine hopes that it will pave the way for more advanced autopilot systems. Although it has only been tested in simulators so far, it shows enormous promise, and it even has certain capabilities that go far beyond those of human pilots, including the ability to remember a much wider variety of charts. The team also hopes to eventually migrate the technology to land vehicles, especially military ones, although we’ve already seen how challenging that can be.

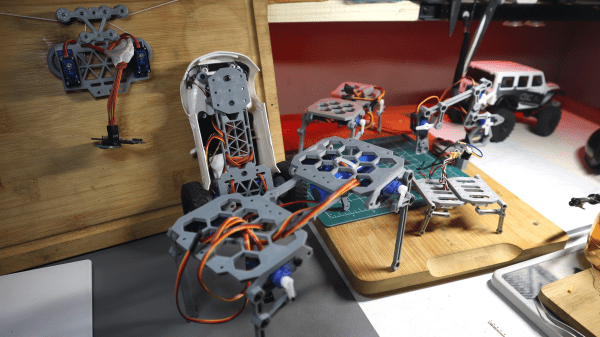
